Abstract

Quantitative grading of facial paralysis (FP) and the associated loss of facial function is essential for evaluating severity and for tracking deterioration or improvement of the condition following treatment. To date, several computer-assisted grading systems have been proposed, but none has gained widespread clinical acceptance. There is still a need for an accurate quantitative assessment tool that is automatic, inexpensive, and easy to use, and that has low inter-observer variability. The authors aim to develop such a comprehensive Automated Facial Grading (AFG) system. One of the system’s modules, the resting symmetry module, has already been presented. The present study describes the implementation of the second module, which grades voluntary movements. The system uses the Kinect v2 sensor to detect and capture facial landmarks in real time. The functions of three regions, the eyebrows, eyes, and mouth, are evaluated by quantitatively grading four voluntary movements. Preliminary results on normal subjects and patients are promising. The AFG system is a novel system suitable for clinical use because it is fast, objective, easy to use, and inexpensive. With further enhancement, it can be extended into a virtual facial rehabilitation tool.

Highlights

- Development of a comprehensive Automated Facial Grading (AFG) system.

- The AFG system is suitable for clinical use because it is fast, easy to use, inexpensive, and free of inter-observer variability.

- The system can be used for both bilateral and unilateral facial paralysis (FP).

- Subjective traditional clinical methods such as House-Brackmann are translated into an objective, automatic, quantitative assessment.

1. Introduction

Facial paralysis (FP) is a condition that affects both facial symmetry and function. It can be congenital or acquired from various causes, for instance nerve injury [1, 2]. FP patients are affected to different degrees, with symptoms that may include speech impairment, the inability to drink and eat normally [1, 3], and the inability to perform facial expressions correctly [2]. Hence there is a need for a quantitative clinical grading tool to evaluate the severity of FP, to quantify the loss of facial function, and to assess the effectiveness of medical treatment or rehabilitation.

In the past several decades, a large number of facial grading systems have been proposed; they are comprehensively reviewed in [1, 3, 4]. They fall into two main categories: traditional systems and computer-assisted grading systems [3]. Most traditional grading systems, such as the House-Brackmann, Nottingham, and Sunnybrook systems, are based on comparing distances between specific facial landmarks at rest and while performing various facial exercises. Although these systems are widely used and accepted by clinicians, they have several limitations. In general, they are subjective because their scoring criteria depend on the physician’s observations and measurements. Furthermore, most of them require significant time to identify and manually mark facial landmarks.

In recent years, several computer-assisted facial grading systems have been proposed [1, 3]; they are comprehensively reviewed in [5, 6]. They use video recording, Photoshop [7], and image processing and classification approaches to provide objective, quantitative, and repeatable assessment of facial function. One such system is SmartEye Pro-MME, which tracks lip movements after identifying and capturing lip landmarks [8]. The Recognition of Smile Excursion (RoSE) scale uses face photos to automatically evaluate the symmetry of the smile in FP patients [9]. However, in both systems the physician has to select the landmarks of interest manually, after which the program calculates the scores automatically [8]. Although these computer-assisted grading systems provide rapid, repeatable results in quantitative form, their general acceptance as a clinical tool is limited by their requirement for costly specialized equipment and by the lengthy time needed to manually identify landmarks on the patient’s face or photo.

More recently, 3D depth sensors, generally used for motion detection in commercial games, have begun to be used as clinical tools for motion detection in medical applications. Recent reviews show that 3D depth sensors such as the Microsoft Kinect® [10] have been used in musculoskeletal virtual rehabilitation systems [11-13]. A small number of studies have used the Kinect depth sensor for facial applications, including the recognition of basic facial expressions [14] and the classification of different facial exercises [15]. In the U.K., ongoing research uses the Kinect depth sensor for facial rehabilitation of patients following stroke [16].

The work presented here lies within the framework of a comprehensive Automated Facial Grading (AFG) system, initially presented by the authors in [17, 18], which employs the Kinect v2 (Microsoft Corp., USA) and SDK 2.0 for automatic capture of facial landmarks. The AFG system includes three assessment modules: resting symmetry grading, voluntary movement grading, and standard grading scales. The resting symmetry module was presented in [17], and a modified extension of it was described in [18]. The current work focuses on developing and testing the voluntary movement assessment module. The AFG system is fast, independent of user subjectivity, and cost-effective enough for widespread clinical use.

2. Methods

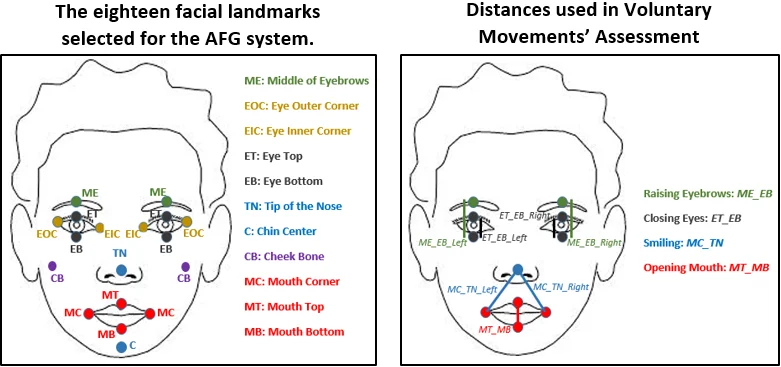

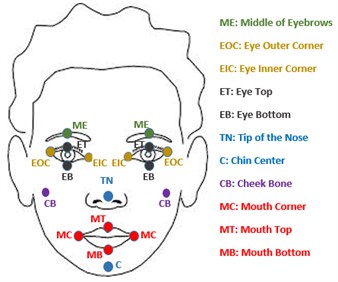

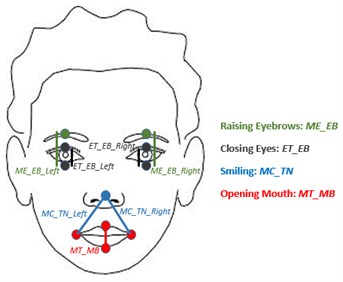

The assessment module presented here grades the function of three facial features (eyebrows, eyes, and mouth) during four selected facial exercises: raising the eyebrows, closing the eyes, smiling, and opening the mouth. The AFG system automatically captures the facial landmarks in real time using the Kinect v2, as previously described [17, 18]. The eighteen selected facial landmarks are shown in Fig. 1. The distances between landmarks used for grading voluntary movements are shown in Fig. 2, and the processing methodology is described below.

Fig. 1. The eighteen facial landmarks selected for the AFG system

Fig. 2. Distances used in the voluntary movement assessment

2.1. Determining normal landmark displacements

To determine the maximum normal displacements of the eyebrows when raised, the eyes when closed, the mouth when opened, and the corners of the mouth when smiling, twenty normal subjects were recruited. All subjects signed an informed consent form. Subjects were instructed to perform each movement to their maximum ability, and the landmark displacements were recorded. The change in the distance MEEB from rest to maximum eyebrow raising had a mean ± standard deviation of 0.55 ± 0.07 cm. The maximum change in the distance ETEB from rest to eye closure was 0.35 ± 0.03 cm. The change in the distance MCTN from rest to maximum smiling was 0.6 ± 0.08 cm, while the maximum change in the distance MTMB from rest to mouth opening was 4 ± 0.3 cm.
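The scoring steps used in Section 2.2 follow from these normal means: if the mean change is taken as 100 %, one percent is the mean divided by 100, and five equal grade bands each span the mean divided by five. A minimal Python sketch of that arithmetic (the dictionary and function names are illustrative, not part of the AFG software):

```python
# Normal maximum changes in landmark distances (mean, SD in cm), from Section 2.1.
NORMAL_CHANGE_CM = {
    "MEEB (raising eyebrows)": (0.55, 0.07),
    "ETEB (closing eyes)": (0.35, 0.03),
    "MCTN (smiling)": (0.60, 0.08),
    "MTMB (opening mouth)": (4.0, 0.3),
}


def cm_per_percent(mean_change_cm: float) -> float:
    """Displacement worth 1 % when the normal mean change scores 100 %."""
    return mean_change_cm / 100.0


def cm_per_grade(mean_change_cm: float, n_grades: int = 5) -> float:
    """Width of one grade band if the mean change is split into n_grades equal bands."""
    return mean_change_cm / n_grades


for name, (mean, _sd) in NORMAL_CHANGE_CM.items():
    print(f"{name}: 1 % = {cm_per_percent(mean):.4f} cm, "
          f"1 grade = {cm_per_grade(mean):.2f} cm")
```

This yields 0.0055, 0.0035, 0.0060, and 0.0400 cm per 1 %, and grade bands of 0.11, 0.07, 0.12, and 0.80 cm. The first three steps and the 0.11 cm and 0.12 cm bands match the values given in Section 2.2. The 0.04 cm mouth step and the eye and mouth band widths are only what the equal-band assumption implies.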

2.2. AFG voluntary movements grading

2.2.1. Raising the eyebrows

The extent of eyebrow raising is calculated from the change in the distance MEEB, as described in Eq. (1), for the left and right sides independently. A score of 1 % is assigned for each 0.0055 cm increase in the distance, so that reaching the average normal change of 0.55 cm receives the maximum score of 100 %. In addition, a grade of 1 to 5 is given corresponding to each 0.11 cm of movement.
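Eq. (1) is not reproduced in this version of the text. The following is a minimal sketch of the rule as described in prose, assuming whole-percent scores (as reported in Table 1). The score-to-grade mapping (grade 0 if the movement is not performed, otherwise one grade per 20 % band, i.e. per 0.11 cm, capped at 5) is inferred from Table 1 rather than stated by the authors. The 10 % "performed" cut-off is hypothetical; Table 1 only shows that it lies above 5 % and at or below 20 %.

```python
# Sketch of eyebrow-raise scoring (Section 2.2.1), following the prose description.
NORMAL_MEEB_CHANGE_CM = 0.55    # mean normal change in MEEB (Section 2.1)
PERFORMED_THRESHOLD_PCT = 10    # hypothetical cut-off below which the grade is 0


def eyebrow_score(meeb_rest_cm: float, meeb_raised_cm: float) -> int:
    """Whole-percent score: 1 % per 0.0055 cm increase in MEEB, capped at 100 %."""
    increase = max(0.0, meeb_raised_cm - meeb_rest_cm)  # a decrease earns no credit
    return min(100, round(100.0 * increase / NORMAL_MEEB_CHANGE_CM))


def grade_from_score(score_pct: int) -> int:
    """Grade 0-5: one grade per 20 % band (0.11 cm of MEEB); 0 below the threshold."""
    if score_pct < PERFORMED_THRESHOLD_PCT:
        return 0
    return min(5, score_pct // 20 + 1)


# Left and right sides are scored independently (hypothetical MEEB values in cm).
for side, rest_cm, raised_cm in [("L", 2.00, 2.30), ("R", 2.10, 2.12)]:
    score = eyebrow_score(rest_cm, raised_cm)
    print(f"{side}: {score} %, grade {grade_from_score(score)}")
```

With these made-up values, the left brow rises 0.30 cm (55 %, grade 3), while the right brow barely moves (4 %, grade 0).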

2.2.2. Closing the eyes

Assessing the performance of closing the eyes depends on the change in the distance ETEB, shown in Fig. 2. The degree to which each eye is closed is calculated from Eq. (2).

A score of 1 % is assigned for each 0.0035 cm change in the distance ETEB, so that the normal mean change of 0.35 cm corresponds to the maximum score of 100 %.
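Eq. (2) does not survive in this version of the text. Assuming it mirrors the eyebrow rule (the magnitude of the ETEB change divided by the 0.0035 cm step, capped at 100 %), a consistent form for each side $s$ would be:

$$
S^{\,s}_{\text{eye}} = \min\!\left(100\%,\; \frac{\left|\,\mathrm{ETEB}^{\,s}_{\text{closed}} - \mathrm{ETEB}^{\,s}_{\text{rest}}\,\right|}{0.0035\ \text{cm}} \times 1\%\right), \qquad s \in \{L, R\}
$$

For example, a 0.28 cm change in ETEB would score $0.28 / 0.0035 \times 1\% = 80\%$. The absolute value is an assumption that avoids committing to the sign convention of ETEB during closure.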

2.2.3. Smiling

Evaluating the performance of smiling is based on the distance MCTN, shown in Fig. 2. The extent of the smile is calculated from Eq. (3).

A score of 1 % is assigned for each 0.006 cm increase in the distance MCTN, with a maximum of 100 % when the increase reaches 0.6 cm from the resting value. In addition, a grade of 1 to 5 is given corresponding to each 0.12 cm of movement.
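Eq. (3) is likewise missing here. A form consistent with the description, assumed rather than copied from the paper, applies the rule to each mouth corner separately:

$$
S^{\,s}_{\text{smile}} = \min\!\left(100\%,\; \frac{\mathrm{MCTN}^{\,s}_{\text{smile}} - \mathrm{MCTN}^{\,s}_{\text{rest}}}{0.006\ \text{cm}} \times 1\%\right), \qquad s \in \{L, R\}
$$

For example, an increase of 0.42 cm gives $0.42 / 0.006 \times 1\% = 70\%$, which falls in the fourth 0.12 cm band (0.36 to 0.48 cm).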

2.2.4. Opening the mouth

Assessing the performance of opening the mouth depends on the change in the distance MTMB, shown in Fig. 2, and is calculated from Eq. (4).
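Eq. (4) is also missing, and this section does not state the step size. By analogy with the other movements, a normal mean opening of 4 cm would imply a step of 0.04 cm per 1 %. This is an assumption, not a value given by the authors. Under it, a sketch of the mouth score (a single value, not split by side) is:

$$
S_{\text{mouth}} = \min\!\left(100\%,\; \frac{\mathrm{MTMB}_{\text{open}} - \mathrm{MTMB}_{\text{rest}}}{0.04\ \text{cm}} \times 1\%\right)
$$

For example, opening the mouth by 1.36 cm beyond rest would give $1.36 / 0.04 \times 1\% = 34\%$.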

3. Results

As an initial proof of concept, grading of the four voluntary facial functions using the AFG system was tested on six normal subjects and two facial paralysis patients. To simulate facial paralysis, each normal subject was instructed to perform the four functions in a specified way, not necessarily to their full ability. The scores and corresponding grades were computed for each side independently and are shown in Table 1. We performed an initial validation of the system by testing it on the two FP patients and comparing its evaluation with their clinical assessment. The experimental procedures involving human subjects described in this paper were approved by the Neurophysiology Department Council, El Kasr El-Aini, Faculty of Medicine, Cairo University. Facial paralysis patients who participated in this research were required to sign a patient consent form.

Table 1. Assessment scores of eight subjects using the AFG system (L = left side, R = right side)

| Subject | Normal/FP patient | Measure | Raising eyebrows (L) | Raising eyebrows (R) | Closing eyes (L) | Closing eyes (R) | Smiling (L) | Smiling (R) | Opening mouth |
|---|---|---|---|---|---|---|---|---|---|
| #1 | Normal | Movement performed? | Yes | Yes | Yes | Yes | Yes | Yes | Yes |
| | | Score (%) | 93 | 99 | 100 | 86 | 100 | 84 | 100 |
| | | Grade (0-5) | 5 | 5 | 5 | 5 | 5 | 5 | 5 |
| #2 | Normal | Movement performed? | Yes | Yes | Yes | Yes | Yes | Yes | Yes |
| | | Score (%) | 92 | 94 | 100 | 73 | 100 | 100 | 98 |
| | | Grade (0-5) | 5 | 5 | 5 | 4 | 5 | 5 | 5 |
| #3 | Normal | Movement performed? | Yes | Yes | Yes | Yes | Yes | Yes | Yes |
| | | Score (%) | 68 | 69 | 89 | 85 | 100 | 90 | 97 |
| | | Grade (0-5) | 4 | 4 | 5 | 5 | 5 | 5 | 5 |
| #4 | Normal | Movement performed? | No | No | No | No | No | No | No |
| | | Score (%) | 4 | 3 | 4 | 0 | 0 | 5 | 3 |
| | | Grade (0-5) | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| #5 | Normal | Movement performed? | No | No | No | No | No | No | No |
| | | Score (%) | 1 | 0 | 0 | 2 | 3 | 4 | 1 |
| | | Grade (0-5) | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| #6 | Normal | Movement performed? | Yes | Yes | Yes | No | Yes | No | Yes |
| | | Score (%) | 80 | 69 | 52 | 3 | 69 | 0 | 34 |
| | | Grade (0-5) | 5 | 4 | 3 | 0 | 4 | 0 | 2 |
| #7 | FP patient | Movement performed? | Yes | Yes | Yes | No | No | No | Yes |
| | | Score (%) | 56 | 32 | 28 | 0 | 0 | 0 | 31 |
| | | Grade (0-5) | 3 | 2 | 2 | 0 | 0 | 0 | 2 |
| #8 | FP patient | Movement performed? | Yes | Yes | No | Yes | No | No | Yes |
| | | Score (%) | 20 | 32 | 0 | 28 | 0 | 0 | 58 |
| | | Grade (0-5) | 2 | 2 | 0 | 2 | 0 | 0 | 3 |
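Section 2.2 gives grade band widths (0.11 cm for the eyebrows, 0.12 cm for smiling) but not the exact score-to-grade mapping. One mapping, inferred here from the table rather than taken from the paper, reproduces every grade in Table 1. It assigns grade 0 when the movement is not performed, and otherwise one grade per 20 % band, capped at 5. A short Python check, with a hypothetical 10 % "performed" threshold (the table only bounds it to above 5 % and at most 20 %):

```python
# Table 1 scores (%) and grades, columns: eyebrows L, R; eyes L, R; smiling L, R; mouth.
TABLE1_SCORES = {
    1: [93, 99, 100, 86, 100, 84, 100],
    2: [92, 94, 100, 73, 100, 100, 98],
    3: [68, 69, 89, 85, 100, 90, 97],
    4: [4, 3, 4, 0, 0, 5, 3],
    5: [1, 0, 0, 2, 3, 4, 1],
    6: [80, 69, 52, 3, 69, 0, 34],
    7: [56, 32, 28, 0, 0, 0, 31],
    8: [20, 32, 0, 28, 0, 0, 58],
}
TABLE1_GRADES = {
    1: [5, 5, 5, 5, 5, 5, 5],
    2: [5, 5, 5, 4, 5, 5, 5],
    3: [4, 4, 5, 5, 5, 5, 5],
    4: [0, 0, 0, 0, 0, 0, 0],
    5: [0, 0, 0, 0, 0, 0, 0],
    6: [5, 4, 3, 0, 4, 0, 2],
    7: [3, 2, 2, 0, 0, 0, 2],
    8: [2, 2, 0, 2, 0, 0, 3],
}
PERFORMED_THRESHOLD_PCT = 10  # hypothetical; any value in (5, 20] fits the table


def inferred_grade(score_pct: int) -> int:
    """Inferred rule: 0 if not performed, else one grade per 20 % band, capped at 5."""
    if score_pct < PERFORMED_THRESHOLD_PCT:
        return 0
    return min(5, score_pct // 20 + 1)


# Collect every cell where the inferred rule disagrees with the published grade.
mismatches = [
    (subject, col, score, grade)
    for subject, scores in TABLE1_SCORES.items()
    for col, (score, grade) in enumerate(zip(scores, TABLE1_GRADES[subject]))
    if inferred_grade(score) != grade
]
print(f"{len(mismatches)} mismatches out of {7 * len(TABLE1_SCORES)} cells")
```

It reports no mismatches across the 56 cells. This shows the rule is consistent with the published numbers, not that the AFG software implements it this way.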

Test subjects 1 and 2 were instructed to perform the four voluntary facial movements to their maximum ability, and the results show high scores on both sides. Subject 3 was instructed not to raise his eyebrows fully, and his results show a grade of 4 on both the right and left sides. Subjects 4 and 5 were instructed not to perform any movement, and their scores are close to zero for all movements on both sides. Subject 6 was instructed to close the left eye only and scored 3 % for the right eye. He was also instructed to move only the left side while smiling, and hence scored 0 % for right-side smiling. In addition, he only slightly opened his mouth, scoring 34 %.

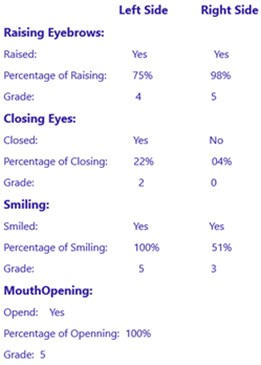

Test subject 7 is a 52-year-old FP patient. Electromyogram (EMG) examination and nerve conduction studies showed evidence of a complete lesion affecting the right facial nerve with no evidence of regeneration. When asked to perform the four exercises using the AFG system, he raised his eyebrows and opened his mouth to a moderate extent, as shown in Table 1. However, he could not close his right eye, which remained open. He had severe right FP, as diagnosed using needle EMG. Test subject 8 is a 58-year-old FP patient. EMG examination showed evidence of a severe partial lesion affecting the left facial nerve. When she was examined using the AFG system, she slightly raised her eyebrows and opened her mouth. She could not close her left eye, which remained open. She was diagnosed with severe left FP. A sample report is shown in Fig. 3. For each of the four movements, it shows both the percentage of successful task accomplishment and the grade for the left and right sides individually.

4. Discussion

Although several computer-based systems for FP grading have been presented in the literature [6, 7], no system has yet been widely accepted by clinicians. Such a system should be accurate, quantitative, automated, and independent of user variability. Moreover, it should be fast, easy to use, and inexpensive. The assessment module designed and implemented in this study is part of ongoing work to create a comprehensive AFG system, as previously reported by the authors [17, 18]. This module grades the function of three facial features (eyebrows, eyes, and mouth) during four selected facial exercises: raising the eyebrows, closing the eyes, smiling, and opening the mouth. The first three movements are found in traditional scales; opening the mouth is an additional movement included here to better assess mouth function.

Fig. 3. Voluntary movement assessment report generated using the AFG system

Automated capture of the 3D locations of eighteen facial landmarks in real time using the Kinect for Windows v2 eliminates the need for manually placing markers on the subject’s face as was required in other grading systems [19]. In addition, it eliminates the need for time-consuming feature recognition and classification algorithms which have been used in other computer-assisted systems [6]. Another significant advantage of the AFG system is that the distances computed do not depend on facial orientation or tilting.
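The orientation independence follows from geometry. A Euclidean distance between two 3D points is unchanged by any rigid motion (rotation plus translation) applied to both points. The Python sketch below checks this for made-up landmark coordinates and an arbitrary head pose. In practice the claim also relies on the Kinect tracking the landmarks accurately at the new pose, which this sketch does not model.

```python
import math


def rotate_yaw(p, angle_rad):
    """Rotate a 3D point about the vertical (y) axis, like turning the head."""
    x, y, z = p
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return (c * x + s * z, y, -s * x + c * z)


def rotate_roll(p, angle_rad):
    """Rotate a 3D point about the depth (z) axis, like tilting the head sideways."""
    x, y, z = p
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return (c * x - s * y, s * x + c * y, z)


def head_pose(p, yaw_deg=25.0, roll_deg=10.0, shift=(3.0, -1.0, 4.0)):
    """Apply a hypothetical rigid head motion: yaw, then roll, then translation."""
    x, y, z = rotate_roll(rotate_yaw(p, math.radians(yaw_deg)), math.radians(roll_deg))
    return (x + shift[0], y + shift[1], z + shift[2])


# Hypothetical camera-space coordinates (cm) of a mouth corner and the nose tip.
mouth_corner = (-2.4, -3.1, 58.0)
nose_tip = (0.0, -0.2, 56.5)

d_frontal = math.dist(mouth_corner, nose_tip)
d_turned = math.dist(head_pose(mouth_corner), head_pose(nose_tip))
print(f"frontal: {d_frontal:.6f} cm, turned and tilted: {d_turned:.6f} cm")
```

Both distances agree to floating-point precision, so a grade computed from such distances does not change when the head is turned or tilted.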

Basing the grading of FP on distances between landmarks is a standard, accepted approach in traditional grading systems. In the AFG system, however, these distances are computed automatically, accurately, and objectively within a very short time. Note that the smiling function is not evaluated simply from the distance between the two mouth corners; it is based on the distance between each mouth corner and the tip of the nose, as shown in Fig. 2. This has the advantage of reporting the excursion of each side of the mouth individually. In addition, referencing the mouth corners to a fixed facial landmark avoids erroneous results caused by mouth distortion in FP patients.
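A small numerical illustration of why the nose-tip reference matters, using made-up 3D coordinates (cm) for a smile in which only the left mouth corner moves, as in a subject with right-sided FP. The corner-to-corner distance changes but cannot say which side moved. The per-side MCTN changes isolate the movement to the left side.

```python
import math

# Hypothetical landmark positions (cm), with the nose tip as the fixed reference.
NOSE_TIP = (0.0, 0.0, 0.0)
REST = {"L": (-2.5, -3.0, 1.0), "R": (2.5, -3.0, 1.0)}
SMILE = {"L": (-3.2, -2.8, 1.0), "R": (2.5, -3.0, 1.0)}  # right corner does not move


def mctn_change(side: str) -> float:
    """Change in mouth-corner-to-nose-tip distance (MCTN) from rest to smile."""
    return math.dist(SMILE[side], NOSE_TIP) - math.dist(REST[side], NOSE_TIP)


# Distance between the two mouth corners: one number for the whole mouth.
intercommissure_change = math.dist(SMILE["L"], SMILE["R"]) - math.dist(REST["L"], REST["R"])

print(f"corner-to-corner change: {intercommissure_change:.2f} cm")
for side in ("L", "R"):
    print(f"MCTN change ({side}): {mctn_change(side):.2f} cm")
```

Here the corner-to-corner distance grows by about 0.70 cm, while MCTN grows by about 0.34 cm on the left and not at all on the right. This is the per-side information the AFG report relies on.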

Preliminary testing of the AFG system was performed on six normal subjects and two FP patients. The small number of test subjects is a limitation of this study; nevertheless, the results in Table 1 are promising, and testing on more FP patients is ongoing. The detailed report shown in Fig. 3 gives quantitative grades for the four functions for the right and left sides independently, which allows the AFG system to be used for both unilateral and bilateral FP. This work is still in progress: more landmark points and additional voluntary movements are being added to the system.

5. Conclusions

This work describes the design, implementation, and testing of the voluntary movements grading module of a comprehensive AFG system for FP. The system utilizes the Kinect v2 sensor to detect and capture eighteen facial landmarks in real time without the need to place markers on the subject’s face. The functions of three facial regions, the eyebrows, eyes and mouth, are evaluated by quantitatively grading four voluntary movements: raising the eyebrows, closing the eyes, smiling, and opening the mouth. Grading is based on movement of landmarks during each exercise relative to their resting positions and scores are assigned as percentages of normal limits of motion. The left and right sides are graded independently allowing the AFG system to be used for both bilateral and unilateral FP. Preliminary results are promising and testing on more FP patients is being performed. The AFG system is suitable for clinical use because it is fast, easy to use, low cost, quantitative, and automated.

References

[1] Sundaraj K., Samsudin W. S. Evaluation and grading systems of facial paralysis for facial rehabilitation. Journal of Physical Therapy Science, Vol. 25, Issue 4, 2013, p. 515-519.

[2] Bogart K., Tickle-Degnen L., Ambady N. Communicating without the face: holistic perception of emotions of people with facial paralysis. Basic and Applied Social Psychology, Vol. 36, Issue 4, 2014, p. 309-320.

[3] Zhai M.-Y., Feng G.-D., Gao Z.-Q. Facial grading system: physical and psychological impairments to be considered. Journal of Otology, Vol. 3, Issue 2, 2008, p. 61-67.

[4] Kanerva M. Peripheral Facial Palsy: Grading, Etiology, and Melkersson-Rosenthal Syndrome. Ph.D. Thesis, University of Helsinki, 2008.

[5] Mishima K., Sugahara T. Review article: analysis methods for facial motion. Japanese Dental Science Review, Vol. 45, 2009, p. 4-13.

[6] Tzou C. H., et al. Evolution of the 3-dimensional video system for facial motion analysis. Annals of Plastic Surgery, Vol. 69, Issue 2, 2012, p. 173-185.

[7] Pourmomeny A. A., Zadmehr H., Hossaini M. Measurement of facial movements with Photoshop software during treatment of facial nerve palsy. Journal of Research in Medical Sciences, Vol. 16, Issue 10, 2011, p. 1313-1318.

[8] Sjogreen L., Lohmander A., Kiliaridis S. Exploring quantitative methods for evaluation of lip function. Journal of Oral Rehabilitation, Vol. 38, Issue 6, 2011, p. 410-422.

[9] Rose E., Taub P., Jablonka E., Shnaydman I. RoSE Scale (Recognition of Smile Excursion), http://www.ip.mountsinai.org/blog/rose-scale-recognition-of-smile-excursion/.

[10] Kinect for Windows. Microsoft, http://www.microsoft.com/enus/kinectforwindows/meetkinect/features.aspx.

[11] Gaber A., Taher M. F., Wahed M. A. A comparison of virtual rehabilitation techniques. International Conference on Biomedical Engineering and Systems, 2015.

[12] Freitas D. Q., Da Gama A. E. F., Figueiredo L., Chaves T. M. Development and evaluation of a Kinect based motor rehabilitation game. Proceedings of SBGames, 2012.

[13] Norouzi-Gheidari N., Levin M. F., Fung J., Archambault P. Interactive virtual reality game-based rehabilitation for stroke patients. IEEE International Conference on Virtual Rehabilitation, 2013.

[14] Youssef A. E., Aly S. F., Ibrahim A. S., Abbott A. L. Auto-optimized multimodal expression recognition framework using 3D Kinect data for ASD therapeutic aid. International Journal of Modeling and Optimization, Vol. 3, Issue 2, 2013, p. 112-115.

[15] Lanz C., Olgay B. S., Denzl J., Gross H.-M. Automated classification of therapeutic face exercises using the Kinect. Proceedings of the 8th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications, Barcelona, Spain, 2013.

[16] Breedon P., et al. First for Stroke: using the Microsoft ‘Kinect’ as a facial paralysis stroke. International Journal of Integrated Care, Vol. 14, Issue 8, 2014, http://doi.org/10.5334/ijic.1760.

[17] Gaber A., Taher M. F., Wahed M. A. Automated grading of facial paralysis using the Kinect v2: a proof of concept study. International Conference on Virtual Rehabilitation, Valencia, 2015.

[18] Gaber A., Taher M. F., Wahed M. A. Quantifying facial paralysis using the Kinect v2. International Conference of the IEEE Engineering in Medicine and Biology Society, Milan, 2015.

[19] Ater Y., Gersi L., Glassner Y., Byrt O. Mobile Application for Diagnosis of Facial Palsy, 2014.