Abstract

This paper proposes to estimate the complexity of a multivariate time series by a spatio-temporal entropy based on multivariate singular spectrum analysis (M-SSA). To account for both within- and cross-component dependencies in multiple data channels, the high-dimensional block Toeplitz covariance matrix is decomposed as a Kronecker product of a spatial and a temporal covariance matrix, and the multivariate spatio-temporal entropy is defined in terms of the modulus and angle of a complex quantity constructed from the spatial and temporal components of the multivariate entropy. The benefits of the proposed approach are illustrated by simulations on the complexity analysis of multivariate deterministic and stochastic processes.

1. Introduction

Spatially extended complex dynamical systems, common in physics, biology and the social sciences such as economics, contain multiple interacting components and are nonlinear, non-stationary and noisy; one goal of data analysis is to detect, characterize and possibly predict events that can significantly affect the normal functioning of such a system [1]. Many analysis techniques, originating from synchronization theory, nonlinear dynamics, information theory, statistical physics and the theory of stochastic processes, are available to estimate the strength and direction of interactions from time series [2]. Among these, entropy measures are a powerful tool for time series analysis: they describe the probability distribution of the possible states of a system and are therefore capable of mapping changes in spatio-temporal patterns over time and detecting early signs of a transition from one regime to another. Various practical definitions and calculations of entropy exist [3-6], but most of them were designed predominantly for the scalar case and are not suited to the multivariate time series that are routinely measured in experimental and biological systems. Hence, suitable tools are needed for detecting and quantitatively describing the rich variety of modes that can be excited by the interplay between spatial and temporal behavior. Multivariate multiscale entropy (MMSE) has recently been suggested as a novel measure to characterize the complexity of multivariate time series [4]. The MMSE method uses multivariate sample entropy (MSampEn), accounts for both within- and cross-channel dependencies in multiple data channels, and operates over multiple temporal scales. MMSE has been shown to assess the underlying dynamical richness of multivariate observations and offers more degrees of freedom in the analysis than the standard (univariate) multiscale entropy (MSE) [4].
However, the MSampEn approach has limited applicability when the number of variables increases. Unlike the univariate sample entropy (SampEn) introduced by Richman and Moorman [5], which operates on delay vectors of a univariate time series, MSampEn calculates, for a fixed embedding dimension $m$, the average number of composite delay vector pairs that lie within a fixed threshold $r$ of each other, and repeats the same calculation for the dimension $m + 1$ [4]. Because the composite delay vectors are high-dimensional (their dimension equals the product of the number of variables and the embedding dimension of the reconstructed phase space), the probability that at least two composite delay vectors match within the tolerance $r$ decreases rapidly as the number of variables increases. Another multivariate entropy approach is Multivariate Principal Subspace Entropy (MPSE) [6], introduced as a measure of the spatio-temporal complexity of functional magnetic resonance imaging (fMRI) time series; MPSE relies on the assumption that the fMRI data follow a multivariate Gaussian density.

Multivariate singular spectrum analysis (M-SSA) [7-10] provides insight into the dynamics of the underlying system by decomposing the delay-coordinate phase space of a given multivariate time series into a set of data-adaptive orthonormal components, and it is a powerful tool for the spatio-temporal analysis of vector-valued processes. M-SSA combines two useful approaches of statistical analysis: (1) it uses principal component analysis to determine the major directions in the system's phase space that are populated by the multivariate time series; and (2) it extracts the major spectral components by means of singular spectrum analysis (SSA). However, the Shannon entropy constructed from the M-SSA eigenvalue spectrum has weak discriminating ability and provides no separate information about the degree of complexity of the temporal and spatial components of a multivariate time series.
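The dimensionality argument made for MSampEn can be illustrated numerically. The sketch below is a toy model, not part of MSampEn itself: it assumes that composite delay vectors of $p$ white-noise channels behave like independent standard Gaussian vectors of dimension $p \cdot m$, uses the maximum (Chebyshev) norm as in sample entropy, and counts the fraction of distinct pairs that lie within tolerance $r$. The helper name `match_fraction` and the values $m = 2$, $r = 0.5$ are illustrative assumptions.

```python
import numpy as np


def match_fraction(p, m=2, r=0.5, n_vectors=300, seed=0):
    """Fraction of distinct composite-vector pairs within max-norm distance r.

    Toy model (assumption): composite delay vectors of p white-noise channels
    with embedding dimension m are drawn as i.i.d. standard Gaussian vectors
    of dimension p * m; r is in units of the channel standard deviation.
    """
    rng = np.random.default_rng(seed)
    v = rng.standard_normal((n_vectors, p * m))
    # Chebyshev (max-norm) distance for every pair of vectors
    dist = np.abs(v[:, None, :] - v[None, :, :]).max(axis=-1)
    iu = np.triu_indices(n_vectors, k=1)  # each unordered pair once, no self-matches
    return float(np.mean(dist[iu] <= r))


for p in (1, 2, 4, 8):
    print(p, match_fraction(p))
```

For independent coordinates the match probability is the single-coordinate probability raised to the power $p \cdot m$, so the printed fractions shrink roughly geometrically with the number of channels; real delay-embedded data are correlated, so the decay is slower but has the same origin.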

In the present paper, it is shown that estimating the high-dimensional block Toeplitz covariance matrix on which M-SSA operates via a Kronecker product expansion makes it possible to define the complexity of a multivariate time series in terms of its spatial and temporal components, and thus to obtain more information about the underlying process.

2. Description of the algorithm

Let $\{x_{k,i}\}$, $k = 1, \dots, p$, $i = 1, \dots, N$, be a multivariate time series with $p$ components (variables) of length $N$. In practical applications (EEG neuroimaging, geosciences), these are samples of a space–time random process defined over a $p$-grid of spatial samples and an $N$-grid of time samples. Each component is assumed to have been centred and normalized. Each component $k$ of the multivariate system can be reconstructed in an $m$-dimensional phase space by selecting the embedding dimension $m$ and the time delay $\tau$. Each phase point is thus defined by [11]:

$$
\mathbf{x}_k(i) = \left[x_{k,i},\; x_{k,i+\tau},\; \dots,\; x_{k,i+(m-1)\tau}\right]^{\top}, \tag{1}
$$

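where $i = 1, \dots, N-(m-1)\tau$ and $^{\top}$ denotes the transpose of a real matrix. For $\tau = 1$, the reconstructed phase-space matrix with $N' = N - m + 1$ rows and $m$ columns (called the trajectory matrix) is defined by:

$$
\mathbf{X}_k = \left[\mathbf{x}_k(1), \mathbf{x}_k(2), \dots, \mathbf{x}_k(N')\right]^{\top} =
\begin{pmatrix}
x_{k,1} & x_{k,2} & \cdots & x_{k,m} \\
x_{k,2} & x_{k,3} & \cdots & x_{k,m+1} \\
\vdots & \vdots & \ddots & \vdots \\
x_{k,N'} & x_{k,N'+1} & \cdots & x_{k,N}
\end{pmatrix}, \tag{2}
$$
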
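and contains $m$ delayed copies of component $k$. The total trajectory matrix of the multivariate system is the concatenation of the component trajectory matrices, $\mathbf{X} = (\mathbf{X}_1, \mathbf{X}_2, \dots, \mathbf{X}_p)$, and has $N'$ rows of length $pm$. The classical M-SSA algorithm [8, 9] then computes the covariance matrix of $\mathbf{X}$ and its eigendecomposition. The total trajectory matrix can be rearranged so that the delayed versions of all components follow one another, that is, $\tilde{\mathbf{X}} = (\tilde{\mathbf{X}}_1, \tilde{\mathbf{X}}_2, \dots, \tilde{\mathbf{X}}_m)$, where the $N' \times p$ matrix $\tilde{\mathbf{X}}_j$ collects the $j$th columns of $\mathbf{X}_1, \dots, \mathbf{X}_p$. In this case, and for $N \gg m$, the covariance matrix takes the (approximately) block Toeplitz form:

$$
\mathbf{C} = \frac{1}{N'}\tilde{\mathbf{X}}^{\top}\tilde{\mathbf{X}} \approx
\begin{pmatrix}
\mathbf{C}_0 & \mathbf{C}_1 & \cdots & \mathbf{C}_{m-1} \\
\mathbf{C}_1^{\top} & \mathbf{C}_0 & \cdots & \mathbf{C}_{m-2} \\
\vdots & \vdots & \ddots & \vdots \\
\mathbf{C}_{m-1}^{\top} & \mathbf{C}_{m-2}^{\top} & \cdots & \mathbf{C}_0
\end{pmatrix}, \tag{3}
$$
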
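with $p \times p$ block submatrices that are equal along each (top left to lower right) diagonal, where $(\mathbf{C}_{\ell})_{ab}$ estimates the covariance between $x_{a,i}$ and $x_{b,i+\ell}$. The covariance matrix thus combines all auto- and cross-covariances up to a time lag of $m - 1$. The spatio-temporal covariance matrix can be represented as a Kronecker product (KP) expansion [12-16]:

$$
\mathbf{C} = \sum_{i=1}^{r} \mathbf{T}_i \otimes \mathbf{S}_i, \tag{4}
$$
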
for some separation rank $r$ and some sequences of $m \times m$ temporal matrices $\mathbf{T}_i$ and $p \times p$ spatial matrices $\mathbf{S}_i$ (with the lag-major ordering of $\tilde{\mathbf{X}}$, the temporal factor is the left factor of each Kronecker product). The KP parametrization assumes that an arbitrary spatio-temporal correlation can be modelled as a product of a spatial and a temporal factor. These two factors are independent of each other; hence, the spatial and temporal correlations are separated from each other in the KP model. The spatial covariance matrix $\mathbf{S}$ captures the correlations between the components of the multivariate time series (in real-world applications, signals registered at different sensors), and the temporal covariance matrix $\mathbf{T}$ captures the lag-dependent temporal correlations. For a single term, Eq. (4) means that the temporal covariance matrix is the same at every spatial location and the spatial covariance matrix does not vary with time lag; in other words, there is no space–time interaction (the covariance is separable). To detect the structure of the spatio-temporal dependencies in high-dimensional multivariate systems effectively, we use the full rank approximation model, i.e., we set $r = 1$ in Eq. (4). The solution to the nearest Kronecker product problem for a space–time covariance matrix (NKPST) is given by the singular value decomposition (SVD) of a permuted version of the space–time covariance matrix, as follows:

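1) The matrix $\mathbf{C}$ is rearranged into another matrix $\mathcal{R}(\mathbf{C})$ such that the sum of squares in $\|\mathbf{C} - \mathbf{T} \otimes \mathbf{S}\|_F^2$ is exactly the same as the sum of squares in $\|\mathcal{R}(\mathbf{C}) - \operatorname{vec}(\mathbf{T})\operatorname{vec}(\mathbf{S})^{\top}\|_F^2$, where $\operatorname{vec}(\mathbf{A})$ denotes the vectorized form of a square matrix $\mathbf{A}$ (its columns concatenated into a single column vector). The rows of $\mathcal{R}(\mathbf{C})$ are the transposes of the vectorized $p \times p$ block submatrices of $\mathbf{C}$, where $\mathbf{C}^{(j,k)}$ denotes the block in block row $j$ and block column $k$, $j, k = 1, \dots, m$. That is, the permutation operator $\mathcal{R}$ is defined by setting the $((k-1)m + j)$th row of $\mathcal{R}(\mathbf{C})$ equal to $\operatorname{vec}(\mathbf{C}^{(j,k)})^{\top}$, $j, k = 1, \dots, m$.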

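2) The SVD is performed on the matrix $\mathcal{R}(\mathbf{C})$:

$$
\mathcal{R}(\mathbf{C}) = \sum_{k=1}^{q} \sigma_k\, \mathbf{u}_k \mathbf{v}_k^{\top}, \qquad \sigma_1 \ge \sigma_2 \ge \dots \ge \sigma_q \ge 0, \quad q = \min(m^2, p^2), \tag{5}
$$
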
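and the matrices $\mathbf{T}$ and $\mathbf{S}$ are defined for the selected Kronecker product (full rank approximation) model by $\operatorname{vec}(\mathbf{T}) = \sqrt{\sigma_1}\,\mathbf{u}_1$ and $\operatorname{vec}(\mathbf{S}) = \sqrt{\sigma_1}\,\mathbf{v}_1$, where $\mathbf{u}_1$ and $\mathbf{v}_1$ are the left and right singular vectors corresponding to the largest singular value $\sigma_1$ [13, 16]. These vectors are converted back into the square $m \times m$ matrix $\mathbf{T}$ and the square $p \times p$ matrix $\mathbf{S}$ by applying the inverse permutation (un-vectorization) operator.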
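The embedding, rearrangement and nearest-Kronecker-product steps described above can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the authors' Matlab implementation: it assumes delay $\tau = 1$, the lag-major column ordering of $\tilde{\mathbf{X}}$, the estimator $\mathbf{C} = \tilde{\mathbf{X}}^{\top}\tilde{\mathbf{X}}/N'$, and 0-based indices (so the row index $(k-1)m + j$ becomes `k*m + j`). The function names `block_toeplitz_cov`, `rearrange` and `nearest_kronecker` are hypothetical.

```python
import numpy as np


def block_toeplitz_cov(x, m):
    """Lag-major M-SSA covariance of a (p, N) array with embedding dimension m, delay 1.

    Column j*p + k of the rearranged trajectory matrix holds channel k at lag j,
    so the returned (m*p, m*p) matrix consists of m x m blocks of size p x p.
    """
    p, n = x.shape
    n_rows = n - m + 1  # N' = N - m + 1
    xt = np.hstack([x[:, j:j + n_rows].T for j in range(m)])  # shape (N', m*p)
    return xt.T @ xt / n_rows


def rearrange(c, m, p):
    """Van Loan-Pitsianis rearrangement R(C) of shape (m*m, p*p).

    Row k*m + j (0-based) is vec(C^(j,k))^T, the column-major vectorization
    of the p x p block in block row j and block column k.
    """
    rows = []
    for k in range(m):        # block column
        for j in range(m):    # block row (varies fastest, as in column-major vec)
            block = c[j * p:(j + 1) * p, k * p:(k + 1) * p]
            rows.append(block.reshape(-1, order="F"))
    return np.array(rows)


def nearest_kronecker(c, m, p):
    """Temporal (m x m) and spatial (p x p) factors minimising ||C - kron(T, S)||_F."""
    u, sv, vt = np.linalg.svd(rearrange(c, m, p))
    t = np.sqrt(sv[0]) * u[:, 0].reshape(m, m, order="F")
    s = np.sqrt(sv[0]) * vt[0].reshape(p, p, order="F")
    if np.trace(t) < 0:  # singular vectors are defined only up to a joint sign flip
        t, s = -t, -s
    return t, s
```

For an exactly separable matrix $\mathbf{C} = \mathbf{T}_0 \otimes \mathbf{S}_0$, $\mathcal{R}(\mathbf{C})$ has rank one and the sketch recovers $\mathbf{T}_0 \otimes \mathbf{S}_0$ exactly. Since $\mathcal{R}(\mathbf{C})$ has only $m^2$ rows and $p^2$ columns, a full SVD remains inexpensive even for tens of channels.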

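Finally, the SVD is performed on the estimated matrices $\mathbf{S}$ and $\mathbf{T}$, and the spatial and temporal components of the multivariate entropy (MVE), $E_S$ and $E_T$, are defined as the normalized Shannon entropies of the corresponding eigenvalue spectra:

$$
E_S = -\frac{1}{\ln p}\sum_{i=1}^{p} \tilde{\lambda}_i \ln \tilde{\lambda}_i, \qquad
E_T = -\frac{1}{\ln m}\sum_{j=1}^{m} \tilde{\mu}_j \ln \tilde{\mu}_j, \tag{6}
$$
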
where $\tilde{\lambda}_i = \lambda_i / \sum_{k=1}^{p}\lambda_k$ and $\tilde{\mu}_j = \mu_j / \sum_{k=1}^{m}\mu_k$ are the eigenvalues of $\mathbf{S}$ and $\mathbf{T}$, respectively, normalized to unit sum. The normalized Shannon entropy quantifies how uniformly the eigenvalues are distributed: it tends to unity for a uniform eigenvalue distribution and tends to zero when the spectrum is concentrated in the single largest eigenvalue. The entropy features $E_S$ and $E_T$ can be combined into the complex quantity $E = E_S + iE_T$, and the multivariate spatio-temporal entropy is defined by its modulus $E_\Sigma = |E| = \sqrt{E_S^2 + E_T^2}$ and angle $\varphi_E = \arg E = \arctan(E_T / E_S)$. The angle reflects the balance between $E_S$ and $E_T$ and ranges from 0 (at $E_T = 0$) to $\pi/2$ (at $E_S = 0$).
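Given the estimated spatial and temporal factors, the entropy features and the complex quantity $E = E_S + iE_T$ can be computed as sketched below. The sketch assumes, as described in the text, that each entropy is normalized by the logarithm of the matrix dimension so that it lies in $[0, 1]$; it uses singular values, which coincide with the eigenvalues for symmetric positive semidefinite factors. The function names are hypothetical.

```python
import numpy as np


def normalized_spectral_entropy(mat):
    """Shannon entropy of the unit-sum singular-value spectrum, divided by ln(dim).

    Returns a value in [0, 1]; requires a square matrix of dimension >= 2.
    """
    sv = np.linalg.svd(mat, compute_uv=False)
    lam = sv / sv.sum()
    lam = lam[lam > 0]  # convention: 0 * ln 0 = 0
    return float(-(lam * np.log(lam)).sum() / np.log(mat.shape[0]))


def multivariate_entropy(s_mat, t_mat):
    """Modulus E_Sigma and angle phi_E of E = E_S + i*E_T."""
    e = complex(normalized_spectral_entropy(s_mat), normalized_spectral_entropy(t_mat))
    return abs(e), float(np.angle(e))
```

For example, identity factors give $E_S = E_T = 1$, hence $E_\Sigma = \sqrt{2}$ and $\varphi_E = \pi/4$; a rank-one spatial factor drives $E_S$ to zero and $\varphi_E$ towards $\pi/2$.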

3. Simulation results

To validate the ability of the proposed algorithm to reveal both within- and cross-component properties in multivariate data, we generated multivariate time series with controlled changes in complexity. For all trials, an overembedding with $m = 16$ was chosen to capture the temporal properties of the time series. All data processing and analyses were performed using Matlab software (MathWorks, Natick, MA).

First, to illustrate the ability of the proposed algorithm to model cross-component properties in multivariate data, we considered a multivariate deterministic system: a chain of diffusively coupled Rössler oscillators [9, 17]:

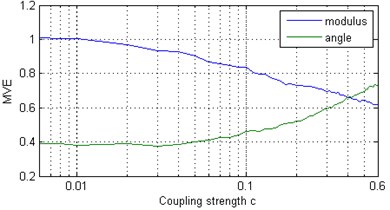

The position in the chain is given by the index $j$; $\omega_j$ are the associated natural frequencies, and we assume free boundary conditions $x_0 = x_1$ and $x_{K+1} = x_K$ for a chain of $K$ oscillators. The frequency mismatch between neighbouring oscillators $j$ and $j+1$ is $\omega_{j+1} - \omega_j$. The parameter $\alpha$ is a fixed system parameter, and $c$ is the coupling strength. We generated time series from a chain of Rössler oscillators using the ode45 integrator of Matlab. The solution was sampled at time intervals of 0.1, and the proposed algorithm was applied to the $x$ components (length 2048) of the Rössler systems. Fig. 1(a) shows the $E_\Sigma$ and $\varphi_E$ curves as the coupling strength $c$ is varied. As $c$ increases, the oscillators form synchronous clusters within which the observed frequency is the same [9]. With a further increase of $c$, the number of clusters decreases as clusters merge, and at sufficiently high $c$ all oscillators are synchronized, i.e., all the mean frequencies coincide, although the amplitudes of the oscillators remain chaotic [17]. MVE is characterized by two components, the modulus and the angle, which together describe the inherent structure of the multivariate data. The increase in $\varphi_E$ indicates that $E_S$ decreases as the strength of dependence between the components of the multivariate time series grows.
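A Python sketch of the simulation set-up is given below as a stand-in for the Matlab ode45 runs, under explicit assumptions. It uses a fixed-step classical Runge-Kutta (RK4) integrator instead of ode45, and it assumes the coupled Rössler form of Osipov et al. [17], $\dot{x}_j = -\omega_j y_j - z_j + c(x_{j+1} - 2x_j + x_{j-1})$, $\dot{y}_j = \omega_j x_j + \alpha y_j$, $\dot{z}_j = 0.1 + z_j(x_j - 8.5)$, with linearly increasing frequencies $\omega_j = \omega_1 + (j-1)\Delta$. The constants 0.1 and 8.5, $\alpha = 0.15$, $\omega_1 = 1$, $\Delta = 0.02$, the chain length and the initial conditions are placeholder values, not taken from the paper.

```python
import numpy as np


def rossler_chain_rhs(state, omega, alpha, c):
    """Time derivative of a chain of diffusively coupled Rössler oscillators.

    Assumed form (after Osipov et al. [17]); state has shape (3, K) holding x, y, z.
    """
    x, y, z = state
    xp = np.pad(x, 1, mode="edge")  # free boundaries: x_0 = x_1, x_{K+1} = x_K
    dx = -omega * y - z + c * (xp[2:] - 2.0 * x + xp[:-2])
    dy = omega * x + alpha * y
    dz = 0.1 + z * (x - 8.5)
    return np.array([dx, dy, dz])


def simulate_chain(n_osc=10, c=0.0, alpha=0.15, omega1=1.0, delta=0.02,
                   n_samples=2048, dt_sample=0.1, substeps=10, transient=1000, seed=0):
    """Fixed-step RK4 integration; returns x components, shape (n_osc, n_samples)."""
    rng = np.random.default_rng(seed)
    omega = omega1 + delta * np.arange(n_osc)  # omega_j = omega_1 + (j - 1) * delta
    state = np.vstack([rng.uniform(-5.0, 5.0, (2, n_osc)),   # x, y
                       rng.uniform(0.0, 1.0, (1, n_osc))])   # z
    h = dt_sample / substeps
    out = np.empty((n_osc, n_samples))
    for n in range(transient + n_samples):
        for _ in range(substeps):
            k1 = rossler_chain_rhs(state, omega, alpha, c)
            k2 = rossler_chain_rhs(state + 0.5 * h * k1, omega, alpha, c)
            k3 = rossler_chain_rhs(state + 0.5 * h * k2, omega, alpha, c)
            k4 = rossler_chain_rhs(state + h * k3, omega, alpha, c)
            state = state + (h / 6.0) * (k1 + 2.0 * k2 + 2.0 * k3 + k4)
        if n >= transient:  # discard the transient, keep one sample every dt_sample
            out[:, n - transient] = state[0]
    return out
```

Sweeping `c` (first experiment) or `alpha` with `c=0.0` (second experiment) mirrors the two simulation designs; the resulting entropy curves are not guaranteed to match Fig. 1.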

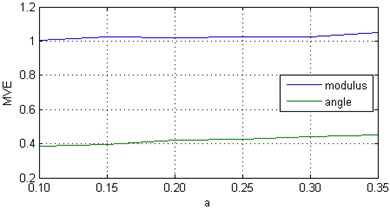

To illustrate the ability of the proposed algorithm to model within-component properties in multivariate data, we considered the same chain of Rössler oscillators at $c = 0$ and gradually increased the parameter $\alpha$ from 0.10 to 0.35. The larger $\alpha$, the more complex the resulting time series. The results are displayed in Fig. 1(b). In this case, the increase in $\varphi_E$ indicates that $E_T$ increases slightly because of the greater irregularity (degree of randomness) of the individual time series.

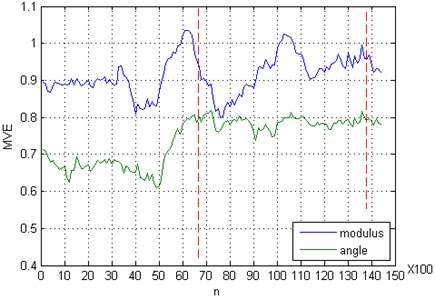

To demonstrate the performance of the proposed algorithm in quantifying changes in the dynamics underlying experimental data, we applied it to multichannel EEG recordings from epilepsy patients. It is well known that during epileptic seizures EEG time series display a marked change in dynamics (mainly oscillations of comparatively large amplitude and well-defined frequency). The EEG data with clinically marked seizure onset and end were taken from the CHB-MIT Scalp EEG Database (http://www.physionet.org/physiobank/database/chbmit/). We analyzed 23 channels of a one-minute raw (unfiltered) scalp EEG recording (record chb01/chb01_04.edf) using the block Toeplitz covariance matrix, which was constructed over a moving window of length 3.9 s, corresponding to 1000 data points. This window was shifted with 90 % overlap over the whole EEG recording, and the time evolution of $E_\Sigma$ and $\varphi_E$ was computed. As Fig. 2 shows, both $E_\Sigma$ and $\varphi_E$ indicate sudden changes in brain dynamics in the preictal and ictal states. Furthermore, the angle of the multivariate entropy provides additional information about the factors that cause these complicated dynamics: the considerable positive deviation of $\varphi_E$ from its mean value indicates that the multivariate entropy changes both because of the growing strength of dependence between the components of the multivariate time series (lower $E_S$) and because of the increased randomness of the individual time series (higher $E_T$).
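The moving-window analysis can be sketched as follows. The sketch assumes the 256 Hz sampling rate of the CHB-MIT recordings (so 1000 samples correspond to about 3.9 s) and a 90 % overlap, i.e., a step of 100 samples; `feature` is a hypothetical per-window callable that would return, for example, $(E_\Sigma, \varphi_E)$ computed from that window. Loading the EDF file is not shown; one option is MNE-Python's `mne.io.read_raw_edf`.

```python
import numpy as np


def sliding_window_features(data, feature, win=1000, overlap=0.9):
    """Apply `feature` to successive windows of a (channels, samples) array.

    `feature` is a hypothetical placeholder for any per-window statistic.
    Returns the window start indices and the list of feature values.
    """
    step = max(1, int(round(win * (1.0 - overlap))))  # 100 samples for 90 % overlap
    starts = np.arange(0, data.shape[1] - win + 1, step)  # only complete windows
    return starts, [feature(data[:, s:s + win]) for s in starts]
```

For a one-minute, 23-channel recording at 256 Hz (15360 samples), this yields 144 complete windows, each yielding one point on the $E_\Sigma$ and $\varphi_E$ curves.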

Fig. 1. Multivariate entropy (modulus $E_\Sigma$ and angle $\varphi_E$) for: a) a chain of coupled Rössler oscillators ($x$ components) at various coupling strengths $c$; b) a chain of Rössler oscillators ($x$ components) at $c = 0$ and various values of the parameter $\alpha$


Fig. 2. Multivariate entropy (modulus $E_\Sigma$ and angle $\varphi_E$) applied to epileptic EEG. The period between the two vertical dashed lines was visually identified by neurologists as the seizure period.

4. Conclusion

Simulations involving multivariate deterministic and stochastic time series show that the approach based on a full rank Kronecker product expansion of the block Toeplitz covariance matrix, calculated from the full augmented trajectory matrix, can reveal temporal dynamics and relationships between the signals; furthermore, it is a suitable tool for assessing the underlying dynamical complexity of multivariate observations. The proposed measure, defined in terms of the modulus and angle of the complex quantity constructed from the spatial and temporal components of the multivariate entropy, reflects both the integrative properties of the system and the ratio between the complexities in the spatial and temporal directions. The algorithm can be applied to estimate the complexity of multivariate time series with a large number (tens) of variates (e.g. EEG), where other approaches become problematic.

References

[1] Seely A. J. E., Macklem P. T. Complex systems and the technology of variability analysis. Critical Care, Vol. 8, 2004, p. 367-384.

[2] Lehnertz K., Ansmann G., Bialonski S., Dickten H., Geier Ch., Porz S. Evolving networks in the human epileptic brain. Physica D, Vol. 267, 2014, p. 7-15.

[3] Bollt E. M., Skufca J. D., McGregor S. J. Control entropy: a complexity measure for nonstationary signals. Mathematical Biosciences and Engineering, Vol. 6, Issue 1, 2009, p. 1-25.

[4] Ahmed M. U., Mandic D. P. Multivariate multiscale entropy: a tool for complexity analysis of multichannel data. Physical Review E, Vol. 84, 2011, p. 061918.

[5] Richman J. S., Moorman J. R. Physiological time-series analysis using approximate entropy and sample entropy. American Journal of Physiology Heart and Circulatory Physiology, Vol. 278, 2000, p. H2039-H2049.

[6] Schütze H., Martinetz T., Anders S., Mamlouk A. M. A multivariate approach to estimate complexity of FMRI time series. Lecture Notes in Computer Science, Vol. 7553, 2012, p. 540-547.

[7] Plaut G., Vautard R. Spells of low-frequency oscillations and weather regimes in the northern hemisphere. Journal of the Atmospheric Sciences, Vol. 51, Issue 2, 1994, p. 210-236.

[8] Ghil M., Allen M. R., Dettinger M. D., Ide K., Kondrashov D., Mann M. E., et al. Advanced spectral methods for climatic time series. Reviews of Geophysics, Vol. 40, 2002, p. 1-41.

[9] Groth A., Ghil M. Multivariate singular spectrum analysis and the road to phase synchronization. Physical Review E, Vol. 84, 2011, p. 036206.

[10] Golyandina N., Korobeynikov A., Shlemov A., Usevich K. Multivariate and 2D extensions of singular spectrum analysis with the Rssa package. arXiv:1309.5050, 2013, p. 1-74.

[11] Kantz H., Schreiber T. Nonlinear Time Series Analysis. Cambridge University Press, Cambridge, 2003.

[12] Van Loan C. F., Pitsianis N. Approximation with Kronecker Products, in Linear Algebra for Large Scale and Real Time Applications. Kluwer Publications, Amsterdam, 1993, p. 293-314.

[13] Genton M. G. Separable approximations of space-time covariance matrices. Environmetrics, Vol. 18, 2007, p. 681-695.

[14] Tsiligkaridis T., Hero A. O. Covariance estimation in high dimensions via Kronecker product expansions. IEEE Transactions on Signal Processing, Vol. 61, Issue 21, 2013, p. 5347-5360.

[15] Wirfält P., Jansson M. On Kronecker and linearly structured covariance matrix estimation. IEEE Transactions on Signal Processing, Vol. 62, Issue 6, 2014, p. 1536-1547.

[16] Bijma F., de Munck J. C., Heethaar R. M. The spatiotemporal MEG covariance matrix modeled as a sum of Kronecker products. NeuroImage, Vol. 27, 2005, p. 402-415.

[17] Osipov G. V., Pikovsky A. S., Rosenblum M. G., Kurths J. Phase synchronization effects in a lattice of nonidentical Roessler oscillators. Physical Review E, Vol. 55, 1997, p. 2353-2361.