Abstract

This paper introduces the composition, operating principle and image processing algorithm of an automatic recognition system for wheel hub model codes based on optical character recognition (OCR). The characteristics of the character information on wheel hubs are analyzed, and an OCR algorithm based on gray-scale template matching is described that identifies wheel hub models effectively. The system meets the requirements of mass production and online rapid sorting on wheel hub production lines. It overcomes the drawbacks of conventional manual sorting, improves productivity and reduces labor intensity.

1. Introduction

With advances in modern industrial production technology, more and more enterprises carry out production on large-scale automatic production lines. Production on such lines involves various product inspections, production monitoring and recognition of parts. In the early stage of line production, recognition was generally done manually, which has many drawbacks: the working environment is unsuitable for long shifts, and precision components are not suited to direct manual measurement. In addition, long-term repetitive work tends to lower workers' motivation, which can affect the recognition results.

The wheel hub is a crucial auto part that is closely related to the safety and comfort of an automobile. During production, different wheel hub models must be manufactured and tested on the same production line using different inspection equipment, process routes, machine tools and cutting tools. Mixed-model production on an automatic line therefore requires each wheel hub model to be identified, and this is still mainly done manually. Manual identification is inefficient and cannot meet the requirements of modern industrial production lines, so a rapid and accurate detection method is urgently needed [1]. The system studied in this paper is an OCR-based automatic recognition system for wheel hub model codes. It identifies the character information on the various wheel hub models manufactured on a production line and performs production statistics and sorting automatically. Its advantages are automatic visual recognition and online operation, with high accuracy and fast processing.

2. Composition of system

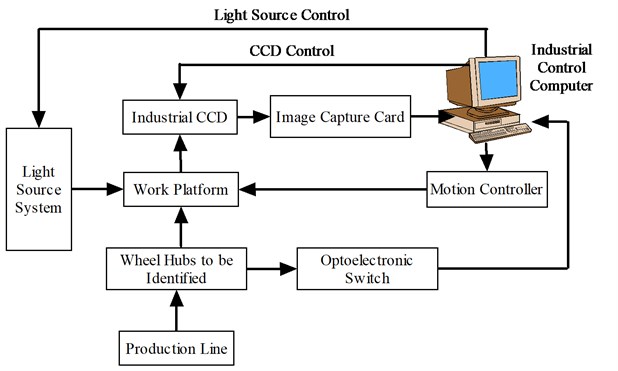

The OCR-based automatic recognition system for wheel hub model codes mainly consists of an industrial control computer, an image capture card, an industrial CCD camera, a light source system, an optoelectronic switch, a motion controller, a work platform and a display. The system structure is shown in the block diagram in Fig. 1.

Fig. 1. Block diagram of system structure

2.1. Preconditions for applying the recognition system and its operating principle

(1) The characters engraved on the wheel hubs by the manufacturer must be standardized and uniform;

(2) The character information engraved on the wheel hubs must have a fixed length.

When these conditions are met, the recognition rate and speed are greatly improved.

Operating principle of the system: when a wheel hub carried along the production line passes the optoelectronic switch, a trigger signal is sent to the industrial control computer. On receiving this signal, the computer commands the work platform to rotate the wheel hub until its character information is in position. The industrial CCD camera then captures the image, the character recognition region is defined, and the characters are identified to produce the code of the wheel hub model.
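The trigger-driven sequence above can be sketched as a single recognition cycle. This is a minimal Python sketch, not the system's actual control software: the class `HubRecognitionCycle` and the callables `position_characters`, `capture_frame` and `recognize_code` are hypothetical stand-ins for the motion controller, the CCD camera with its capture card, and the OCR stage, and the per-model counter is an assumed way of keeping the production statistics mentioned in the introduction.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class HubRecognitionCycle:
    """Hypothetical sketch of the steps run after one optoelectronic-switch signal."""

    position_characters: Callable[[], None]  # rotate platform until the code faces the camera
    capture_frame: Callable[[], Any]         # grab one image via the CCD camera / capture card
    recognize_code: Callable[[Any], str]     # OCR on the character region of that image
    counts: Counter = field(default_factory=Counter)  # hubs seen per model code (assumed)

    def on_switch_triggered(self) -> str:
        """Position, capture, recognize, then update the production statistics."""
        self.position_characters()
        frame = self.capture_frame()
        code = self.recognize_code(frame)
        self.counts[code] += 1
        return code


if __name__ == "__main__":
    cycle = HubRecognitionCycle(
        position_characters=lambda: None,
        capture_frame=lambda: "frame",
        recognize_code=lambda frame: "55977-M2",
    )
    print(cycle.on_switch_triggered())  # 55977-M2
    print(cycle.counts)                 # Counter({'55977-M2': 1})
```

Passing the hardware steps in as callables keeps the sequencing testable without a camera or motion controller attached.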

2.2. Capture and preprocessing of wheel hub character images

When an image of the characters on a wheel hub is captured, the process is inevitably affected by factors such as changes in ambient light, dust, the seal coat of the wheel hub and equipment vibration. Some noise may therefore be present in the image, but the background and the lighting are the main factors that affect image quality. An LED light source is used during image capture to eliminate the influence of uneven lighting and background interference [2].

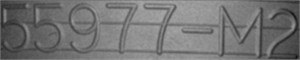

The characters on a wheel hub are generally at a fixed position, so in the image they always fall in a relatively stable area, which is the region of interest for character recognition. By masking the image areas that contain no characters, only the character region is captured and processed; this narrows the search area for the character information and improves the speed and efficiency of image processing. The character information in the image consists of 7 characters. The system preprocesses the character image by smoothing filtering and noise reduction. Fig. 2(a) shows the original character image of wheel hub model 55977-M2, and Fig. 2(b) shows the image after smoothing filtering.
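The region-of-interest masking and smoothing step can be sketched with NumPy as follows. The paper does not name its smoothing filter; a 3 × 3 median filter is assumed here because it removes isolated noise pixels while keeping stroke edges. The helper `crop_roi` is hypothetical, and its coordinates would come from the fixed position of the characters in the camera view.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def crop_roi(image: np.ndarray, top: int, left: int, height: int, width: int) -> np.ndarray:
    """Keep only the fixed region of interest that holds the characters (hypothetical helper)."""
    return image[top:top + height, left:left + width]


def median_smooth(image: np.ndarray, k: int = 3) -> np.ndarray:
    """k x k median filter; borders are handled by replicating edge pixels."""
    if k % 2 == 0:
        raise ValueError("kernel size k must be odd")
    padded = np.pad(image, k // 2, mode="edge")
    windows = sliding_window_view(padded, (k, k))  # shape (H, W, k, k)
    return np.median(windows, axis=(-2, -1)).astype(image.dtype)


if __name__ == "__main__":
    frame = np.full((6, 8), 90, dtype=np.uint8)
    frame[3, 4] = 255                   # isolated bright noise pixel
    roi = crop_roi(frame, 1, 2, 4, 5)   # 4 x 5 character region
    print(median_smooth(roi)[2, 2])     # 90: the outlier is removed
```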

Fig. 2. Image of characters on wheel hub model 55977-M2

a) The original image of characters on wheel hub

b) Image after smooth filtering

2.3. Threshold segmentation of wheel hub character images

Analysis of the wheel hub character images reveals the following characteristics:

(1) The characters consist of digits and letters;

(2) The gray levels of the characters differ little from those of the background, so the segmentation threshold must be set relatively low in order to retain the character information in the image.

Based on these characteristics, the image is processed as follows:

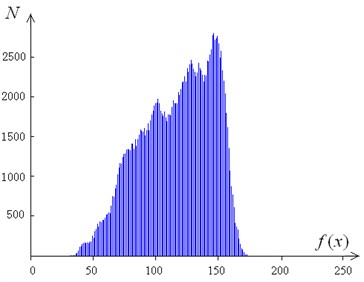

(1) The grayscale histogram of the character image is analyzed to determine the best gray-level threshold for image segmentation [3].

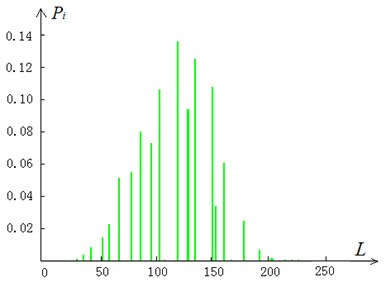

Fig. 3 shows the grayscale histogram of wheel hub model 55977-M2, where the X-axis is the gray level of the pixels, f(x), and the Y-axis is the number of pixels with that gray level, N. Because the gray levels of the characters differ little from those of the background, the two peaks in the histogram are not distinct and the gray-level distribution is fairly concentrated. Segmentation with the bimodal (double-peak) method would therefore perform poorly, so the histogram threshold method is used instead to determine the best threshold and segment the image. Given the small contrast between foreground and background, the segmentation aims to retain as much character information as possible while suppressing the background.

(2) Principle for determining the best segmentation threshold with the histogram threshold method.

Let the original grayscale image f(x) have $L$ gray levels and contain $M \times N$ pixels in total, and let $n_i$ be the number of pixels with gray level $i$. The statistical probability $P_i$ of gray level $i$ is then given by Eq. (1):

$$P_i = \frac{n_i}{M \times N}, \qquad i = 0, 1, \dots, L-1, \qquad \sum_{i=0}^{L-1} P_i = 1 \tag{1}$$

For a probability of occurrence $P_j$ preset in the system, the gray level $t$ whose probability corresponds to $P_j$ is taken as the best threshold for segmenting the wheel hub character image.
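Eq. (1) and the threshold choice can be sketched as follows. The paper only states that the gray level corresponding to the preset probability (0.02 in its tests) is used as $t$; it does not say which level is chosen when several qualify. The helper `threshold_from_probability` therefore uses an assumed rule: starting just above the histogram peak (taken to be the dominant background level, since characters are the pixels kept above $t$), it returns the first gray level whose $P_i$ has dropped to the preset value or below.

```python
import numpy as np


def gray_probabilities(image: np.ndarray, levels: int = 256) -> np.ndarray:
    """Eq. (1): P_i = n_i / (M * N) for every gray level i = 0 .. levels-1."""
    n = np.bincount(image.ravel(), minlength=levels)  # n_i, pixels at each level
    return n / image.size                              # image.size == M * N


def threshold_from_probability(p: np.ndarray, p_target: float = 0.02) -> int:
    """Assumed rule: first level above the histogram peak with P_i <= p_target."""
    peak = int(np.argmax(p))  # dominant (background) gray level
    for level in range(peak + 1, len(p)):
        if p[level] <= p_target:
            return level
    return len(p) - 1         # fallback: no level qualifies


if __name__ == "__main__":
    # Synthetic 25 x 40 image: dark background, a faint halo, bright strokes.
    img = np.array([60] * 900 + [61] * 60 + [200] * 40, dtype=np.uint8).reshape(25, 40)
    p = gray_probabilities(img)
    print(threshold_from_probability(p))  # 62
```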

After the best threshold $t$ has been determined, the image is segmented and its remaining background noise is further suppressed using Eq. (2):

$$g(x,y) = \begin{cases} f(x,y), & f(x,y) \ge t \\ 0, & f(x,y) < t \end{cases} \tag{2}$$

Here f(x,y) is the gray level of pixel (x,y) in the original image, g(x,y) is the gray level of the same pixel in the segmented image, and t is the best segmentation threshold.

According to Eq. (2), pixels of the character image whose gray level is above the threshold t keep their gray level, while pixels whose gray level is below t are set to 0. Pixels above the threshold belong mainly to the characters on the wheel hub, whereas pixels below it belong mainly to the background and noise.
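The segmentation rule amounts to a masked copy of the image, as in this NumPy sketch. The text does not say how a pixel exactly equal to $t$ is treated; the function `segment` (a hypothetical name) keeps it.

```python
import numpy as np


def segment(image: np.ndarray, t: int) -> np.ndarray:
    """Eq. (2): keep f(x, y) where f(x, y) >= t, set all other pixels to 0."""
    return np.where(image >= t, image, 0).astype(image.dtype)


if __name__ == "__main__":
    f = np.array([[10, 50], [100, 200]], dtype=np.uint8)
    print(segment(f, 100).tolist())  # [[0, 0], [100, 200]]
```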

Fig. 4 shows the statistical probability of each gray level in the character image of wheel hub model 55977-M2; the probability corresponding to the selected threshold is 0.02. Fig. 5 shows the image segmented with the histogram threshold method.

Compared with Fig. 2(b), the image in Fig. 5 looks brighter and contains less background noise. The circular line left on the semi-finished wheel hub by the casting process is still present, but it does not affect subsequent recognition.

Fig. 3. Histogram of grayscale

Fig. 4. Statistical probability of gray levels of characters on wheel hub model 55977-M2

Fig. 5. Segmentation result for wheel hub model 55977-M2 with the histogram threshold method

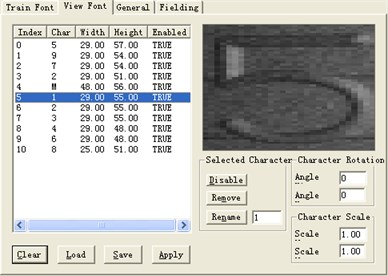

2.4. Production of character templates

The system extracts character templates from preprocessed images of wheel hub characters that contain little noise. The template library is built with a seven-segment frame projection, whose layout resembles a seven-segment LED display. Each character pixel is projected onto its nearest segment, and the number of projected points on each segment is counted, giving seven numbers. Each of the seven numbers is divided by the total number of character pixels, and the resulting ratios are normalized [1, 2, 4-6]. When templates are produced in this way, the system also records the pixel size of each character template.
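The seven-segment projection can be sketched as follows. The segment layout, the normalization to the character's bounding box and the nearest-segment rule below are assumptions about how "project onto the nearest side" is realized, not the system's documented implementation; `SEGMENTS` and `seven_segment_ratios` are hypothetical names. A pixel that is equidistant from two segments goes to the one listed first.

```python
import numpy as np

# Assumed segment layout in a unit box (x to the right, y downward),
# ordered like a seven-segment display: a, b, c, d, e, f, g.
SEGMENTS = np.array([
    [[0.0, 0.0], [1.0, 0.0]],  # a: top
    [[1.0, 0.0], [1.0, 0.5]],  # b: upper right
    [[1.0, 0.5], [1.0, 1.0]],  # c: lower right
    [[0.0, 1.0], [1.0, 1.0]],  # d: bottom
    [[0.0, 0.5], [0.0, 1.0]],  # e: lower left
    [[0.0, 0.0], [0.0, 0.5]],  # f: upper left
    [[0.0, 0.5], [1.0, 0.5]],  # g: middle
])


def seven_segment_ratios(char_img: np.ndarray) -> np.ndarray:
    """Assign each character pixel to its nearest segment; return the 7 count ratios."""
    rows, cols = np.nonzero(char_img)
    if rows.size == 0:
        return np.zeros(7)
    # Normalize pixel positions to the character's bounding box.
    x = (cols - cols.min()) / max(cols.max() - cols.min(), 1)
    y = (rows - rows.min()) / max(rows.max() - rows.min(), 1)
    pts = np.stack([x, y], axis=1)                      # (n, 2)
    a, b = SEGMENTS[:, 0], SEGMENTS[:, 1]               # (7, 2) each
    ab = b - a
    # Position of the closest point along each segment, clipped to [0, 1].
    t = np.einsum("nsk,sk->ns", pts[:, None, :] - a, ab) / np.sum(ab * ab, axis=1)
    t = np.clip(t, 0.0, 1.0)
    closest = a + t[..., None] * ab                     # (n, 7, 2)
    dist = np.linalg.norm(pts[:, None, :] - closest, axis=2)
    nearest = np.argmin(dist, axis=1)                   # segment index per pixel
    return np.bincount(nearest, minlength=7) / rows.size


if __name__ == "__main__":
    box = np.zeros((11, 7), dtype=np.uint8)
    box[[0, -1], :] = 1  # top and bottom strokes
    box[:, [0, -1]] = 1  # left and right strokes ("0"-like outline)
    print(np.round(seven_segment_ratios(box) * 32).astype(int))  # [7 5 5 6 5 4 0]
```

The "0"-like outline gets no pixels on the middle segment g, which is the kind of distinction the seven ratios are meant to capture.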

The ratios are normalized as follows: a ratio below 0.08 is recorded as 0, a ratio between 0.08 and 0.15 is recorded as 1, and a ratio above 0.15 is recorded as 2. The resulting seven values are used as the features of the character.
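The normalization maps each ratio to a three-level code. The text leaves open how ratios exactly equal to 0.08 or 0.15 are treated; this sketch (with the hypothetical name `quantize_ratios`) assumes both fall into level 1.

```python
def quantize_ratios(ratios):
    """Map each projection ratio to 0, 1 or 2 using the 0.08 / 0.15 cut-offs."""
    codes = []
    for r in ratios:
        if r < 0.08:
            codes.append(0)
        elif r <= 0.15:  # assumed: both boundary values map to level 1
            codes.append(1)
        else:
            codes.append(2)
    return tuple(codes)


if __name__ == "__main__":
    print(quantize_ratios([0.22, 0.16, 0.16, 0.19, 0.16, 0.12, 0.0]))  # (2, 2, 2, 2, 2, 1, 0)
```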

Fig. 6 shows the interface of the control software used to produce character templates with this method. The same method is used to produce templates for the digits 0 to 9 and the letters A to W.

2.5. Template matching recognition based on features

Template matching is one of the most classic and representative image recognition methods. Image matching algorithms fall into two main categories: matching based on grayscale correlation and matching based on features [7, 8]. The system adopts the second category.

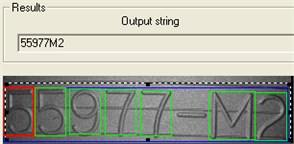

Following this principle, a feature vector is extracted from each character and compared with the feature vectors of the characters in the template library. Characters whose features resemble a particular template are grouped with that template, and the minimum-distance method is used to identify them [3, 4, 7, 8].

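Suppose there are $m$ recognizable classes $\omega_1, \omega_2, \dots, \omega_m$, represented by the template (sample) feature vectors $Z_1, Z_2, \dots, Z_m$. The distance between the feature vector $X$ of a character to be recognized and each template vector is then defined by Eq. (3):

$$D(X, Z_i) = \lVert X - Z_i \rVert = \sqrt{(X - Z_i)^{\mathsf{T}} (X - Z_i)}, \qquad i = 1, 2, \dots, m \tag{3}$$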

After the distances to all templates have been computed, the feature vector $X$ is assigned to the class $\omega_k$ whose template vector $Z_k$ is closest to, and therefore most similar to, $X$, as expressed by Eq. (4), where $D$ is the distance of Eq. (3):

$$X \in \omega_k \quad \text{if} \quad D(X, Z_k) = \min_{i = 1, \dots, m} D(X, Z_i) \tag{4}$$
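Eqs. (3) and (4) describe a nearest-template (minimum-distance) classifier, sketched below. Euclidean distance is assumed, and the seven-value template vectors in the usage example are made up for illustration, not the system's real templates. The function name `classify_min_distance` is hypothetical; the returned distance could feed a similarity threshold like the 70 % minimum mentioned in the conclusions, but the paper does not give its similarity formula, so none is implemented here.

```python
import numpy as np


def classify_min_distance(x, templates):
    """Eqs. (3)-(4): return (label, distance) of the template nearest to x.

    `templates` maps each character label to its feature vector Z_i;
    Euclidean distance is assumed for D(X, Z_i).
    """
    labels = list(templates)
    z = np.array([templates[label] for label in labels], dtype=float)  # (m, 7)
    d = np.linalg.norm(z - np.asarray(x, dtype=float), axis=1)         # D(X, Z_i)
    k = int(np.argmin(d))                                              # Eq. (4)
    return labels[k], float(d[k])


if __name__ == "__main__":
    templates = {  # hypothetical feature codes, not the system's real templates
        "0": (2, 2, 2, 2, 2, 2, 0),
        "1": (0, 2, 2, 0, 0, 0, 0),
        "7": (2, 2, 2, 0, 0, 0, 0),
    }
    print(classify_min_distance((2, 2, 2, 0, 0, 1, 0), templates))  # ('7', 1.0)
```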

Fig. 7 shows the result of recognizing the character image of wheel hub model 55977-M2 with this method.

Fig. 6. Production of character templates

Fig. 7. Recognition result

3. Conclusions

The recognition algorithm based on gray-scale template matching of wheel hub characters has been successfully applied in a company's automatic recognition system for wheel hub model codes, where it effectively achieves automatic recognition of the model codes. Several wheel hub models, with 200 wheel hubs per model, were used in a comprehensive performance test of the recognition system. Test environment: CPU Intel Core i5-2500 at 3.3 GHz; 4 GB DDR3 memory.

Table 1. Test results

| No. | Wheel hub model | Total wheel hubs | Correctly identified | Recognition rate | Average character similarity | Recognition time |
|---|---|---|---|---|---|---|
| 1 | 970775 | 200 | 194 | 97 % | 86.2 % | 16.5 ms |
| 2 | 37767 | 200 | 195 | 97.5 % | 89.2 % | 15.6 ms |
| 3 | 961665 | 200 | 193 | 96.5 % | 85.4 % | 18.8 ms |
| 4 | 80677 | 200 | 195 | 97.5 % | 86.3 % | 13.2 ms |
| 5 | 801775 | 200 | 192 | 96 % | 87.2 % | 14.9 ms |
| 6 | 67978 | 200 | 195 | 97.5 % | 92.3 % | 15.6 ms |
| 7 | 70077 | 200 | 194 | 97 % | 93.5 % | 14.5 ms |
| 8 | 70566 | 200 | 193 | 96.5 % | 89.8 % | 15.2 ms |
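The summary figures in the conclusions can be recomputed directly from Table 1. This small Python check (the variable names are illustrative) confirms that the lowest per-model recognition rate is exactly 96 %, and it also derives a pooled rate over all 1,600 wheel hubs and a mean recognition time, which the paper does not report itself.

```python
# Correctly identified hubs per model, copied from Table 1 (200 hubs each).
correct = {
    "970775": 194, "37767": 195, "961665": 193, "80677": 195,
    "801775": 192, "67978": 195, "70077": 194, "70566": 193,
}
times_ms = [16.5, 15.6, 18.8, 13.2, 14.9, 15.6, 14.5, 15.2]
PER_MODEL = 200

rates = {model: 100 * n / PER_MODEL for model, n in correct.items()}
overall = 100 * sum(correct.values()) / (PER_MODEL * len(correct))
mean_time = sum(times_ms) / len(times_ms)

print(f"lowest rate: {min(rates.values())} %")  # lowest rate: 96.0 %
print(f"pooled rate: {overall:.2f} %")          # pooled rate: 96.94 %
print(f"mean time:   {mean_time:.1f} ms")       # mean time:   15.5 ms
```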

Analysis of the data in Table 1 leads to the following conclusions:

1) Characters in the original wheel hub image can be recognized only after smoothing filtering and threshold segmentation. Because the gray levels of the characters differ little from those of the background, the gray level corresponding to a probability of occurrence of 0.02 is selected as the segmentation threshold. The template database contains only the templates for the digits 0 to 9 and the letters A to W, so the number of templates is fixed. The system therefore does not suffer from the long template-loading times that a large number of wheel hub models would cause, and recognition is very fast;

2) Changes in ambient light have a relatively large impact on the recognition rate of the system, so an LED light source that provides even illumination is used. In addition, the system is installed in a closed control cabinet whose only opening is the channel through which the production line passes. The recognition rate of the system is at least 96 %, which demonstrates the effectiveness of the algorithm used by the OCR-based automatic recognition system for wheel hub model codes;

3) In the test, the minimum character similarity set in the system was 70 %. Lowering this similarity threshold further would give the system a higher recognition rate;

4) The system applies computer vision recognition, which offers non-contact, online real-time operation, high speed and resistance to on-site interference, and can therefore classify the different wheel hub models produced on a production line.

References

[1] Zhao Yuliang, Liu Weijun, Liu Yongxian. Research on online identification system for auto wheel hubs. Machinery Design and Manufacture, Vol. 10, 2007, p. 164-165.

[2] Yang Guang, Feng Tao, Qin Yongzuo. Research on improvement of image processing algorithm for automatic identification system for code of wheel hub model. Nuclear Electronics and Detection Technology, Vol. 6, 2012, p. 732-735.

[3] Otsu N. A threshold selection method from gray-level histograms. IEEE Transactions on Systems, Man, and Cybernetics, Vol. 9, Issue 1, 1979, p. 62-66.

[4] Gao Tong, Jiang Hua, Lv Min. Method of identification for hand-written characters based on template matching. Journal of Harbin Institute of Technology, Vol. 1, 1999, p. 105-106.

[5] Duan Yuanyuan. Automatic Identification System for Wheel Hub Models of Automobiles. Master’s Thesis, Changchun University of Science and Technology, 2009.

[6] Wan Jin’e, Yuan Baoshe, Gu Zhao, Sabit Mirsali. Correlation matching recognition algorithm based on character normalized double projection. Journal of Computer Applications, Vol. 33, Issue 3, 2013, p. 645-647.

[7] Liu Ying, Cao Jianzhong, Xu Zhaohui, et al. Improvement of image matching algorithm based on grayscale correlation. Journal of Applied Optics, Vol. 9, 2007, p. 536-539.

[8] Ataman E., Aatre V. K., Wong K. M. A fast method for real-time median filtering. IEEE Transactions on Acoustics, Speech and Signal Processing, Vol. 28, Issue 4, 1980, p. 415-421.

About this article

This work was supported by the Jilin Provincial Science and Technology Program (No. 20180201090GX) and CITIC Dicastal Co., Ltd. The equipment has been put into practical use.