Abstract

The paper proposes a method for constructing models of low level for two-phase flow based on a combination of finite-difference solutions and detailed spatial approximation, providing the possibility of approximating the models for solving optimal control the operating parameters of the oil reservoir.

1. Introduction

The choice of numerical methods for solving optimization problems determined by the type tasks. For a simple class of optimization problems with linear objective function and linear constraints (linear programming) developed methods to find an optimal solution (or set the insolubility of the problem) in a finite number of calculation steps. Introduction conditions discrete variables complicates finding solutions. Arbitrary views of objective functions and constraints limiting the capacity of existing computational optimization methods. The biggest challenge for solving optimization problems is the presence of several extremes. Upon receipt of the decision, without any additional studies of the behavior of the objective function, it is impossible to establish unequivocally, it has converged to some extremes. Therefore, global optimization is yet unsolved problem. The result of the application of classical optimization methods, such as requiring the calculation of derivatives, as well as direct methods is highly dependent on the existing initial allowable approach. In [1, 2] fairly complete and detailed concepts and modern approaches to the implementation of genetic algorithms. The analysis of the existing crossing operators, selection and mutation, and suggested some new ones. The focus is on algorithms for solving unconstrained optimization or with restrictions “corridor” type. Therefore, we will consider further development of genetic algorithm adapted for solving problems with constraints of any kind.

2. The model of the system and the optimization strategy

The use of real coding can improve the accuracy of the solutions found and increase the speed of finding the global minimum or maximum. The speed is increased due to the lack of coding and decoding processes of chromosomes in each step of the algorithm. The only transformation that it is advisable to carry out this reduction of variables to a dimensionless form in the range [0, 1]. The literature describes the following types are most common crossing operators [2-5].

BLX- operator. For crossing two individuals selected:

The value of the new gene is defined as a linear combination of . Coefficients , defined by the following relations: , , where the number of ; – random number.

B1 operator simulating mating with binary coding:

Crossover operator B2.

Just as in a binary coding, transform the real number on the interval an integer . In the case of a binary cross-breeding with the binary representation of the number randomly determined by the position of the section of the chromosome for crossing. This is followed by the exchange of selected parts of the chromosomes. If there are two numbers , , the result of interbreeding .

The result of the simulation of the operation in the real version is the expression:

Coefficients , are defined as follows:

where – a random number corresponding to the position of crossing; , ; – random number and .

Operators crossing B1, B2, BLX are probabilistic mechanism by random choice of u. The operators Bin1, Bin2 often crossing occurs in the lower ranks of numbers. The operator BLX crossing occurs uniformly in the range of the real numbers. For all operators crossing condition .

As used random mutation operator mutation in which , subject to change, it takes a random value in the range of its changes , .

Besides mutation operator applied inversion operator that for real code has the form:

The sequence of operations in a real coding algorithm is the same as the standard binary encoding genetic algorithm. The difference lies in the form of genetic operators. Before starting the process at 0 is formed by a population consisting of individuals of m. An individual or chromosome is represented as:

where , – vector function arguments; – transformation, the transition from the vector (phenotype) to the encoded representation (genotype).

The transformation is carried out by bringing the argument to the dimensionless form:

Inverse transformation:

Held on the evolution of the population iteration . The selection of individuals for crossbreeding carried out by the tournament: two randomly selected individuals and individuals with the best quality involved for mating with a given probability of crossing.

One of the ways to improve the work of optimization techniques is the use of hybrid algorithms that combine the properties of gradient and evolutionary algorithms. Usually, they find the initial approximation, localized in extremum, using a genetic algorithm, and then specify the position of the extremum of the gradient method. In that case also accelerates convergence, but the extremum is not necessarily global.

In [1, 6], a hybrid algorithm based on parallel work of genetic operators and any additional gradient or direct method. leader - in population created by the genetic algorithm, the best individual is selected. This leader is trained separately for an additional method. If it is thus a qualitative indication of better than all other individuals in the population, then it is introduced into a population and is involved in the reproduction of progeny. If there is individual in the population resulting from evolution to be the best indicator, it becomes the leader.

As an additional method shows good efficacy conjugate gradient method (the Fletcher-Reeves) (CGM). In the method of Fletcher-Reeves search direction extremum is conjugated. The system of linearly independent directions , is said to be conjugate with respect to the positive definite matrix , if , , , . It is necessary to construct a sequence of conjugate directions , , are linear combinations and previous destinations , . The starting direction is taken . Direction selected conjugate towards , so that and . Coefficient For direction we have . Having built a sequence of search directions we get the following algorithm: 1) 0, at point determined ; 2) , determined , . Compute , and direction . When replaced to and the iterative process is cyclically repeated until the conditions .

Conjugate gradient method has a high rate of convergence to the optimal solution, which is close to the square. In addition, this method does not calculate the matrix and are not stored in memory, it can be used to solve optimization problems of large dimension.

The hybrid algorithm.

1) 0. Formed population consisting of m individuals for VGA or RGA method. The first issue to take special with the best result (the minimum value of the function (1)). With the conversion we obtain a vector and .

2) . Using the method of MSG is calculated as follows , vector approach . Genetic algorithm creates the next population and it is the best specimen that defines the next vector .

3) If , then .

4) If , then ..

5) , , , ; . If the stop condition is satisfied, then otherwise end on a paragraph 2.

As a further method, it is also advisable to use a genetic algorithm, but in a narrower field of search. The boundaries of the narrow search region are determined as follows. If , – the best individual in the population, the additional border search area is: , ; . Test results showed that the amount of .

To solve optimization problems and function restrictions. As a rule, the problem of conditional minimization reduced to the unconstrained minimization problem through the use of penalty functions, and then find a solution using genetic algorithms and gradient methods. When using penalty functions the problem of determining the weighting factors, and the function itself is often the nature of the ravine. Different objective functions and constraints require careful selection of factors that cannot always be done successfully, and there is a need for a new selection when changing the conditions of the original problem.

Suffice universal are two approaches, based on the selection of operations and the formation of new populations. In one approach [7] used the idea of parallel populations with acceptable and unacceptable specimens. In another [8] is also involved in illegal crossing of individuals with selections to dominate. We will apply for the numerical solution of the problem with the restrictions basic genetic algorithm, described in [6] with an additional selection, described in [8]. Genetic algorithm of [6] applies to crossing BLX operators, B1, B2 and classical operator Holland. These operators are selected at each crossing procedure at random, which expands their opportunities.

When selecting a tournament selection method is applied to the implementation of the following rules: 1. If the two compared individuals permissible, i.e. satisfying the constraints is selected from the best indicator of the objective function. 2. If one individual is permitted, and the second not, permissible selected. 3. If both individuals invalid, then choose the one with the smaller number of outstanding restrictions. 4. If both individuals are unacceptable and have the same number of violated constraints, the chosen one in which a violation of minimal restrictions.

These rules are implemented algorithmically easy and does not require significant processing of a basic genetic algorithm.

3. Computational experiments

To test the efficiency of the method, consider some examples that are used for testing optimization techniques. For all the tasks carried out to minimize the objective functions.

Objective 1.

Rosenbrock objective function:

The global minimum without restrictions: , ;

As-equality constraints used by the system of equations that define the circle of radius for each pair of adjacent variables:

As constraints-inequalities favor a system of inequalities that define the multi-dimensional ring with an inner radius and an outer:

For if is inside the ring, then the best solution is for the 400 iterations. When , a decision [1.4141-1.4143], 3414.227 is reached for 2000 iterations. When the equality constraints, defined in the form 1.999, 2.001, the same solution was obtained at a significantly greater number of iterations 32000.

Objective 2.

The task of multi-function Rastrigin:

The global minimum without restrictions: , ; .

Restrictions are the same as in the problem 1.

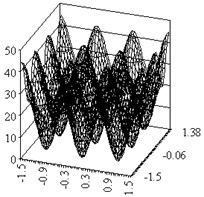

a large number of extrema of this function can be seen even on the chart for two variables.

When 0, , decision achieved by 250 iterations.

When 0.9999, 1.0001 received the decision: even numbers of variables –0.9999, odd , 49.9901 when the number of iterations 2300.

Objective 3 (Welded beam design) [8].

Mathematical record of work tasks [8].

The optimal solution, known in the literature: 0.2444; 6.2187; 8.2915; 0.2444; 2.3816.

We received: 0.244369; 6.2186069; 8.2914718; 0.244369; 2.3811304 for 1200 iterations.

Objective 4 [9] (38 restrictions).

Received for 1200 iterations:

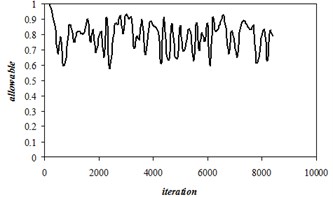

, –1.905155. Active restrictions: 1, 2, 34, 37, 38. Fig. 2 shows the proportion of the population of feasible solutions in volume 1000.

Fig. 1Rastrigin function, n=2

Fig. 2The proportion of feasible solutions

4. Conclusions

The results of solving test problems show that the proposed algorithm can handle all the tasks discussed in [8, 9] for arbitrary restrictions and objective functions. This algorithm is applied to solve further problems for the optimal operation of oil wells.

References

-

Teneev V. A., Shaura A. S., Jakimovich B. A. Structural-Parametric Optimization and Management. Izhevsk State Technical University Publishing House, Izhevsk, 2014, p. 235, (in Russian).

-

Eshelman L. J., Schaffer J. D. Real-Coded Genetic Algorithms and Interval-Schemata. Foundations of Genetic Algorithms 2. Morgan Kaufman Publishers, San Mateo, 1993, p. 187-202.

-

Herrera F., Lozano M., Verdegay J. L. Tackling real-coded genetic algorithms: operators and tools for the behavior analysis. Artificial Intelligence Review, Vol. 12, Issue 4, 1998, p. 265-319.

-

Haupt Randy L., Haupt Sue Ellen Practical Genetic Algorithms. 2nd Edition. John Wiley and Sons, Hoboken, NJ, 2004, p. 261.

-

Poli Riccardo, Langdon William B., McPhee Nicholas F. A Field Guide to Genetic Programming. Lulu Enterprises, UK, 2008, p. 235.

-

Tyulenev V. A., Jakimovich B. A. Genetic Algorithms in Modeling Systems. Izhevsk State Technical University Publishing House, Izhevsk, 2010, p. 308, (in Russian).

-

Prokhorovskaya E. V., Teneev V. A., Shaura A. S. Genetic algorithms with real-coded for solving constrained optimization problems. Intelligent Systems in Production, Vol. 2, 2008, p. 46-55, (in Russian).

-

Deb K. An efficient constraint handling method for genetic algorithms. Computer Methods in Applied Mechanics and Engineering, Vol. 186, 2000, p. 311-338.

-

Michalewicz Z. Genetic algorithms, numerical optimization, and constraints. Proceedings of the 6th International Conference on Genetic Algorithms, Morgan Kauffman, San Mateo, 1995, p. 151-158.