Abstract

This paper presents the results of a convolutional neural network system that recognizes whether people entering a building are wearing a mask. Input data come from cameras mounted in the humanoid robot COVIDguard. The data collected by the humanoid (the temperature of people entering the facility, their location, and the way the protective mask is worn) are stored in the cloud. This enables the use of advanced image recognition algorithms and, consequently, allows the administrator to track people within range of the robot's sensory systems and to verify the security level of the premises. The paper presents the architecture of the intelligent COVIDguard platform, the structure of the sensory system and the results of the neural network training.

Highlights

- The accuracy rate of the implemented neural network algorithm, responsible for estimating whether a person entering a building has an anti-virus mask on their face, is 90%.

- Under varied lighting conditions, including cloudy weather and shaded areas, the algorithm worked with 70 % confidence.

- The use of such a solution will potentially increase the usability of the described biometric platform.

1. Introduction

During a pandemic, infections spread easily. People are asked to stay at home so that the virus cannot spread, yet everyone still faces situations that require leaving home, such as shopping. Not everyone can be tested for COVID-19 every day. The sanitary regimes imposed, including the obligations to wear masks and to disinfect hands, can be burdensome, but they are the only way to resist the virus. Until everyone has been vaccinated, it is important to look after one's own health and that of others. In daily practice, testing for the coronavirus starts with an unpleasant nasal swab, and the sample is then analysed in a laboratory. The results arrive the next day. The algorithm of the interactive, intelligent biometric platform is intended to complement these tests.

The processing of data and its subsequent interpretation by a computer is much more efficient than examination by a single doctor. Statistics make it possible to summarise and interpret the huge set of information collected by the platform. Using convolutional neural networks, it is possible to create an algorithm based on multiple input parameters. In COVID-19 research, convolutional networks are mainly used in the analysis of lung X-ray images [1]-[7]. Applications of fuzzy systems in predicting the spread of pandemics are also known [8]-[11]. In addition, fuzzy logic and neural networks allow the regulation of traffic in many industrial manufacturing plants [12]-[15]. This paper, by contrast, presents an intelligent biometric platform that uses artificial intelligence to supervise people and to search for individuals with suspected COVID-19 infection. The platform is equipped with a number of classification and inference algorithms.

The operation of the algorithm in the intelligent biometric platform is based on convolutional neural networks. The algorithm uses biometric sensors and a thermal imaging camera to estimate whether a person may be infected with COVID-19. The neural network uses the high-resolution camera image to check whether a person walking past the camera is wearing a protective mask. The checking algorithm will help reduce the risk of spreading the virus. Continuous containment of the virus may eventually end the COVID-19 pandemic, so that the restrictions it caused can be lifted once and for all.

2. Measurement stand

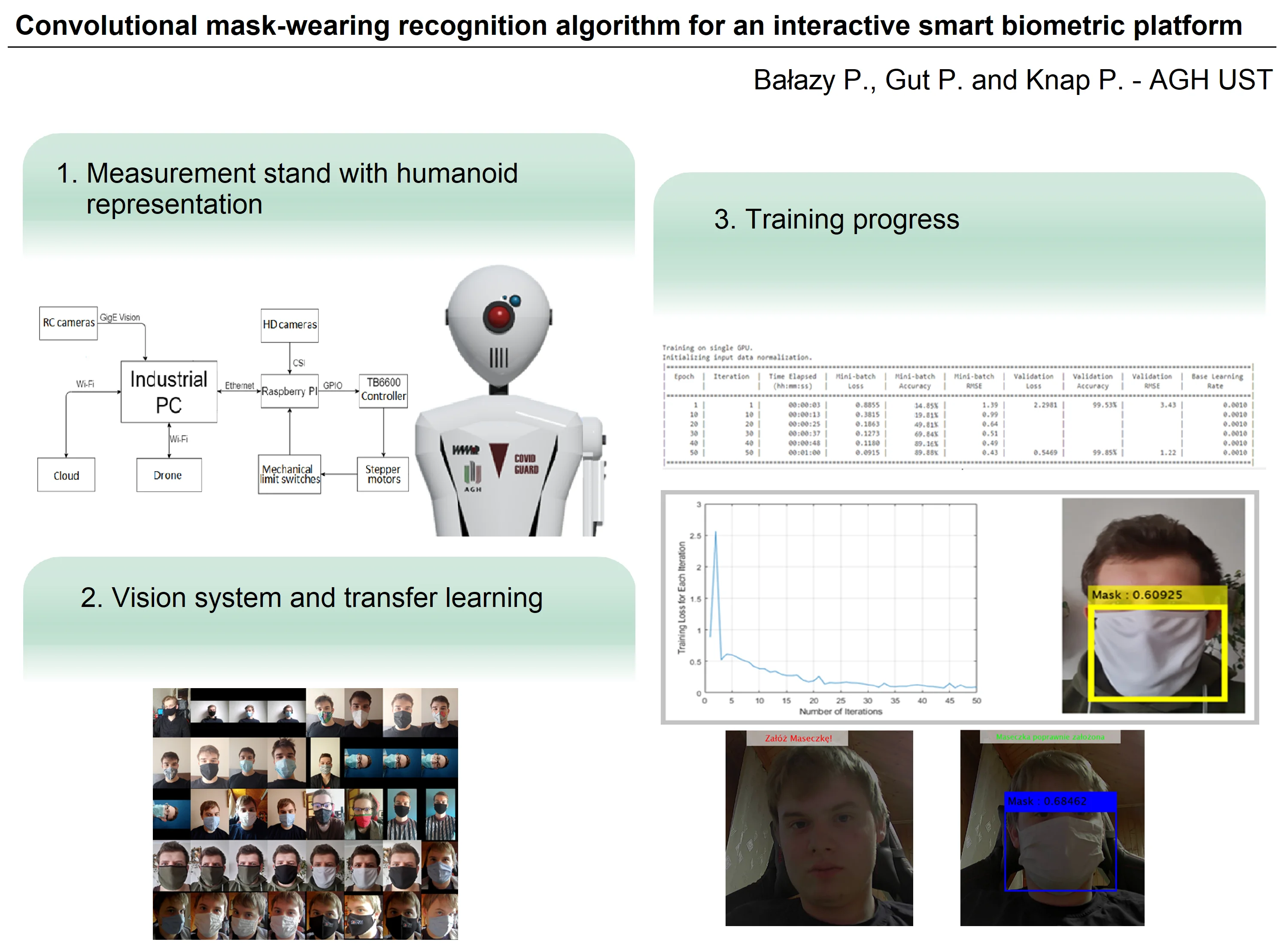

For the algorithm research, a prototype humanoid robot was created to serve as an intelligent watchman in a building. The prototype consists of the body itself, equipped with a SCARA-type robotic arm (shown in Fig. 1). The humanoid is equipped with a high-resolution camera, a thermal imaging camera and a loudspeaker used to make announcements.

Fig. 1. Representation of a humanoid

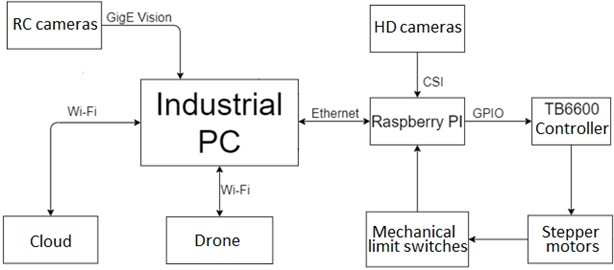

The central computing unit of the watchman is an industrial computer with an Intel Neural Compute Stick AI accelerator, on which the neural algorithm is implemented. The neural network was pre-trained on the collected data and uploaded directly to the device.

Fig. 2. Connection diagram

The humanoid stands in a fixed location but is able to move using drives placed in its individual joints. The drives are controlled by a Raspberry Pi platform connected directly to the central computing unit via Ethernet. The Raspberry Pi is also used to operate the high-resolution cameras. The humanoid's main task is to monitor and track people entering rooms. It is connected via the GigE Vision protocol to a thermal camera, which measures the temperature of people entering. When a person without a mask or with an elevated temperature is detected, the humanoid sends out an autonomous drone. The drone receives feedback from stereovision cameras and is controlled via the industrial computer. Although the algorithm requires less computing power than classical image recognition, it will be connected to the cloud via the Industrial Internet of Things (IIoT) in case performance drops. The connection diagram of the system components is shown in Fig. 2.
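The drone dispatch rule described above can be sketched as a simple decision function. This is a minimal illustration, not the authors' implementation: the names `Observation` and `should_dispatch_drone` are hypothetical, and the 38.0 °C threshold is an assumption, since the text does not state what counts as an elevated temperature.

```python
from dataclasses import dataclass

# Hypothetical threshold: the paper does not state what counts as "elevated".
FEVER_THRESHOLD_C = 38.0


@dataclass
class Observation:
    """One person seen by the robot (field names are illustrative)."""
    mask_detected: bool
    temperature_c: float


def should_dispatch_drone(obs: Observation,
                          threshold_c: float = FEVER_THRESHOLD_C) -> bool:
    """Return True when the person has no mask or an elevated temperature."""
    return (not obs.mask_detected) or obs.temperature_c >= threshold_c
```

Under these assumptions, `should_dispatch_drone(Observation(mask_detected=False, temperature_c=36.6))` evaluates to `True`, because a missing mask alone is enough to trigger the drone.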

3. Vision system

The vision system is a high-resolution camera mounted in the head of the prototype. The camera provides 60 frames per second; the frame rate was reduced to 15 fps to lower the CPU load. The task of the algorithm is to detect, in the camera image, masks on the faces of passing people. The algorithm was built by transfer learning from a Single Shot Multibox Detector (SSD) model, which is used to detect various types of objects. The model was restricted to face mask recognition by modifying the last layers of the YOLOv2 neural network and replacing the training data with images of people wearing masks. A study was conducted comparing training from scratch with transfer learning. Both approaches produced a correctly working algorithm. However, building a network from scratch requires a huge amount of training data, and because relatively little data was available, the transfer learning approach was used. By taking a pre-built model and adapting it to a new data set, a task-specific network was obtained with a small amount of training data and computing time. Some of the training images are shown in Fig. 3.
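The text does not say how the frame rate was reduced from 60 fps to 15 fps. One simple possibility, shown here purely as an assumption, is to forward only every fourth frame to the detector. The function name `decimate` is hypothetical.

```python
from typing import Iterable, Iterator, TypeVar

Frame = TypeVar("Frame")


def decimate(frames: Iterable[Frame], source_fps: int = 60,
             target_fps: int = 15) -> Iterator[Frame]:
    """Yield every (source_fps // target_fps)-th frame, starting with the first."""
    if target_fps <= 0 or source_fps < target_fps or source_fps % target_fps != 0:
        raise ValueError("target_fps must be a positive divisor of source_fps")
    step = source_fps // target_fps  # 60 // 15 == 4: keep one frame in four
    for index, frame in enumerate(frames):
        if index % step == 0:
            yield frame
```

Applied to one second of video (60 frames), this yields 15 frames, so the detector runs a quarter as often.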

Fig. 3. Part of the learning data

4. Transfer learning

The transfer learning method used by the authors applies an already trained machine learning model to a different but related problem. An off-the-shelf face recognition model was used and modified to recognize protective masks. The algorithm reuses what it has learned previously to solve the new task. The advantages of this method are that it saves a lot of network training time, performs better in most cases, and reduces the need for training data.
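The principle can be illustrated with a deliberately tiny, dependency-free sketch: a "pretrained" feature extractor is kept frozen and only a new output layer is trained on the new task. Everything here is an assumption made for illustration (the made-up `FROZEN_WEIGHTS`, the two-dimensional toy inputs, the logistic output layer); it is not the detector used in the paper.

```python
import math

# "Pretrained" feature extractor with fixed weights that are never updated.
# The values are made up; a real backbone has millions of weights.
FROZEN_WEIGHTS = [[1.0, -1.0], [0.5, 0.5]]


def extract_features(x):
    """Frozen backbone: a fixed linear map followed by ReLU."""
    return [max(0.0, sum(w * v for w, v in zip(row, x))) for row in FROZEN_WEIGHTS]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_head(samples, labels, learning_rate=0.1, epochs=200):
    """Train only the new output layer (logistic regression on frozen features)."""
    features = [extract_features(x) for x in samples]
    weights, bias = [0.0] * len(features[0]), 0.0
    for _ in range(epochs):
        for f, y in zip(features, labels):
            # Gradient of the cross-entropy loss with respect to the logit.
            error = sigmoid(sum(w * v for w, v in zip(weights, f)) + bias) - y
            weights = [w - learning_rate * error * v for w, v in zip(weights, f)]
            bias -= learning_rate * error
    return weights, bias


def predict(x, weights, bias):
    """Classify a raw input by passing it through the frozen backbone and new head."""
    f = extract_features(x)
    return sigmoid(sum(w * v for w, v in zip(weights, f)) + bias) >= 0.5


# Toy task: label 1 when the first input component exceeds the second.
samples = [[2, 0], [3, 1], [0, 2], [1, 3]]
labels = [1, 1, 0, 0]
head_weights, head_bias = train_head(samples, labels)
```

Only three numbers (two head weights and one bias) are learned here, which mirrors why transfer learning needs less data and time than training a whole network from scratch.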

5. Results

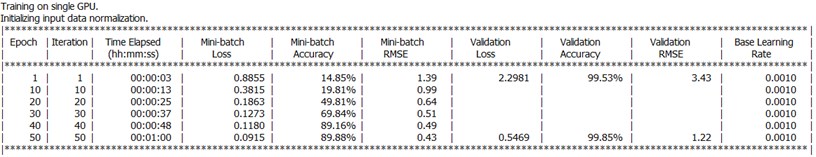

A training data set of several hundred images with correctly worn masks was prepared and processed. The learning process required identifying the correct search object, the protective mask. A ready-made automatic labelling algorithm based on a face recognition network was used for this. After the labelling layers of the neural network were changed, the new data set was ready and training started. The training result depends on many factors, the main one being the training data set: as much data as possible is desirable. The network was trained to recognise single-coloured and multi-coloured masks, and it performs much better with single-colour masks. Apart from the data, the training options can also be adjusted, in particular the learning rate, which controls how aggressively the algorithm changes the weights of the network. A learning rate smaller than the default was used because of the transfer learning method. Fig. 4 shows the results of the best training attempt.
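As a reminder of what the learning rate controls, the textbook gradient-descent update is given below. It is shown as an assumption for illustration only, since the text does not state which optimizer was used. Here $w_t$ denotes the network weights at step $t$, $L$ the loss and $\eta$ the learning rate.

```latex
w_{t+1} = w_t - \eta \, \nabla_{w} L(w_t)
```

A smaller $\eta$ produces smaller steps, so the weights inherited from the pretrained model are changed only gently, which is the usual motivation for lowering the learning rate in transfer learning.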

Fig. 4. Learning progress

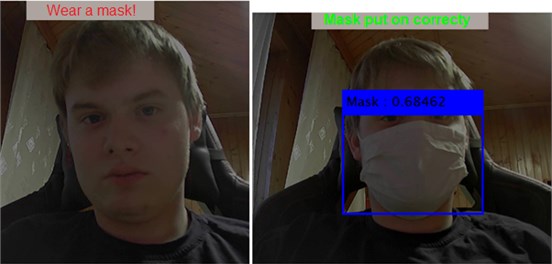

The accuracy (Mini-batch Accuracy) indicates the percentage of training images that the network has classified correctly. A value close to 90 % does not in itself mean a great success, because the loss (Mini-batch Loss) should be as close to zero as possible, which it is not. Fig. 5 presents the result of the analysis of one of the images from the training set by the trained network.
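The difference between the two metrics can be made concrete with a small sketch. It assumes a plain classification setting with cross-entropy loss, which the text does not confirm (object detectors typically use a composite loss). The function names and the example probabilities are made up for illustration.

```python
import math


def minibatch_accuracy(probabilities, labels):
    """Fraction of samples whose most probable class equals the true label."""
    correct = sum(1 for p, y in zip(probabilities, labels) if p.index(max(p)) == y)
    return correct / len(labels)


def minibatch_loss(probabilities, labels):
    """Mean cross-entropy: average of -log(probability of the true class)."""
    return -sum(math.log(p[y]) for p, y in zip(probabilities, labels)) / len(labels)


# Ten samples of class 1; nine are classified correctly in both batches.
labels = [1] * 10
hesitant = [[0.4, 0.6]] * 9 + [[0.6, 0.4]]     # correct, but with low confidence
confident = [[0.01, 0.99]] * 9 + [[0.6, 0.4]]  # correct, with high confidence

hesitant_acc = minibatch_accuracy(hesitant, labels)
confident_acc = minibatch_accuracy(confident, labels)
hesitant_loss = minibatch_loss(hesitant, labels)
confident_loss = minibatch_loss(confident, labels)
```

Both batches reach the same accuracy of 0.9, yet the hesitant batch has a much larger loss, which is why accuracy alone does not show how well the network has been trained.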

Fig. 5. Learning sample results

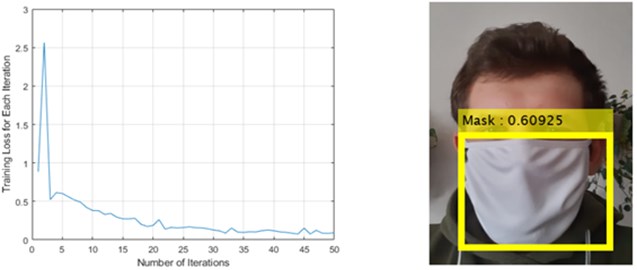

The performance of the algorithm is satisfactory: it detects masks on faces without any major problems, even in low light, as shown in Fig. 6.

Fig. 6. Operating in low light conditions

6. Conclusions

The accuracy of the implemented neural network algorithm, which estimates whether a person entering a building is wearing a protective mask, is 90 %. The algorithm does not make mistakes in low light conditions. It was tested in various lighting conditions, including cloudy weather and shaded areas, where it worked with 70 % confidence. The authors intend to develop the algorithm further by extending it to check the temperature of the person being tested using a thermal imaging camera. After face detection, the algorithm will check the temperature around the person's forehead via the thermal imaging camera. Such a solution will potentially increase the usability of the described biometric platform, because it will make it possible to determine whether the subject has an elevated temperature, one of the main symptoms of infectious diseases, including COVID-19. The algorithm and the device will be developed further, and the results will be published in future papers.
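The planned forehead temperature check could look roughly like the sketch below. It is only one possible reading of this future work: it assumes that the thermal image is already aligned pixel for pixel with the face detector's coordinates and that the forehead occupies the upper quarter of the face bounding box. The function name and parameters are hypothetical.

```python
def forehead_temperature(thermal, face_box, forehead_fraction=0.25):
    """Return the maximum temperature in the upper part of a face bounding box.

    thermal: 2D list of temperatures in deg C, indexed as thermal[row][col].
    face_box: (left, top, width, height) in thermal-image pixel coordinates.
    forehead_fraction: assumed share of the box height taken as the forehead.
    """
    left, top, width, height = face_box
    rows = max(1, int(height * forehead_fraction))  # use at least one pixel row
    region = [thermal[r][c]
              for r in range(top, top + rows)
              for c in range(left, left + width)]
    return max(region)


# Toy 4x4 thermal frame with a face occupying the two middle columns.
thermal_frame = [
    [30.0, 36.5, 37.1, 30.0],
    [30.0, 35.0, 35.2, 30.0],
    [30.0, 34.8, 34.9, 30.0],
    [30.0, 34.0, 34.1, 30.0],
]
reading = forehead_temperature(thermal_frame, face_box=(1, 0, 2, 4))
```

Taking the maximum rather than the mean is a design choice made here to reduce the influence of hair or a cap covering part of the forehead. The resulting reading would then be compared with a fever threshold chosen by the operator.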

References

[1] T. D. Pham, “A comprehensive study on classification of COVID-19 on computed tomography with pretrained convolutional neural networks,” Scientific Reports, Vol. 10, No. 1, Dec. 2020, https://doi.org/10.1038/s41598-020-74164-z

[2] S. Tiwari and A. Jain, “Convolutional capsule network for COVID‐19 detection using radiography images,” International Journal of Imaging Systems and Technology, Vol. 31, No. 2, pp. 525–539, Jun. 2021, https://doi.org/10.1002/ima.22566

[3] G. Marques, D. Agarwal, and I. de La Torre Díez, “Automated medical diagnosis of COVID-19 through EfficientNet convolutional neural network,” Applied Soft Computing, Vol. 96, p. 106691, Nov. 2020, https://doi.org/10.1016/j.asoc.2020.106691

[4] T. Goel, R. Murugan, S. Mirjalili, and D. K. Chakrabartty, “OptCoNet: an optimized convolutional neural network for an automatic diagnosis of COVID-19,” Applied Intelligence, Vol. 51, No. 3, pp. 1351–1366, Mar. 2021, https://doi.org/10.1007/s10489-020-01904-z

[5] N. K. Chowdhury, M. M. Rahman, and M. A. Kabir, “PDCOVIDNeT: A parallel-dilated convolutional neural network architecture for detecting COVID-19 from chest X-ray images,” Health Information Science and Systems, Vol. 8, No. 1, Dec. 2020, https://doi.org/10.1007/s13755-020-00119-3

[6] M. Rahimzadeh and A. Attar, “A modified deep convolutional neural network for detecting COVID-19 and pneumonia from chest X-ray images based on the concatenation of Xception and ResNet50V2,” Informatics in Medicine Unlocked, Vol. 19, p. 100360, 2020, https://doi.org/10.1016/j.imu.2020.100360

[7] D. Singh, V. Kumar, and M. Kaur, “Densely connected convolutional networks-based COVID-19 screening model,” Applied Intelligence, Vol. 51, No. 5, pp. 3044–3051, May 2021, https://doi.org/10.1007/s10489-020-02149-6

[8] M. K. Sharma, N. Dhiman, Vandana, and V. N. Mishra, “Mediative fuzzy logic mathematical model: A contradictory management prediction in COVID-19 pandemic,” Applied Soft Computing, Vol. 105, p. 107285, Jul. 2021, https://doi.org/10.1016/j.asoc.2021.107285

[9] I. Dominik, “Type-2 fuzzy logic controller for position control of shape memory alloy wire actuator,” Journal of Intelligent Material Systems and Structures, Vol. 27, No. 14, pp. 1917–1926, Aug. 2016, https://doi.org/10.1177/1045389x15610907

[10] O. Castillo and P. Melin, “Forecasting of COVID-19 time series for countries in the world based on a hybrid approach combining the fractal dimension and fuzzy logic,” Chaos, Solitons and Fractals, Vol. 140, p. 110242, Nov. 2020, https://doi.org/10.1016/j.chaos.2020.110242

[11] M. Kozek, “Transfer learning algorithm in image analysis with augmented reality headset for Industry 4.0 technology,” in 2020 Mechatronics Systems and Materials (MSM), pp. 1–5, Jul. 2020, https://doi.org/10.1109/msm49833.2020.9201739

[12] T. Chen and C.-W. Lin, “Smart and automation technologies for ensuring the long-term operation of a factory amid the COVID-19 pandemic: an evolving fuzzy assessment approach,” The International Journal of Advanced Manufacturing Technology, Vol. 111, No. 11-12, pp. 3545–3558, Dec. 2020, https://doi.org/10.1007/s00170-020-06097-w

[13] F. Gul, W. Rahiman, and S. S. Nazli Alhady, “A comprehensive study for robot navigation techniques,” Cogent Engineering, Vol. 6, No. 1, p. 1632046, Jan. 2019, https://doi.org/10.1080/23311916.2019.1632046

[14] I. Dominik, “Implementation of the Type-2 Fuzzy Controller in PLC,” Solid State Phenomena, Vol. 164, pp. 95–98, Jun. 2010, https://doi.org/10.4028/www.scientific.net/ssp.164.95

[15] K. Lalik and M. Kozek, “Cubic SVM neural classification algorithm for self-excited acoustical system,” in 2020 Mechatronics Systems and Materials (MSM), pp. 1–5, Jul. 2020, https://doi.org/10.1109/msm49833.2020.9201724