Abstract

Traditional detection methods suffer from radiation exposure, high cost, and a shortage of medical resources, which restricts the uptake of early breast cancer screening. An inexpensive, accessible, and user-friendly detection method is urgently needed. Infrared thermography, an emerging means of breast cancer detection, is extremely sensitive to tissue abnormalities caused by inflammation and vascular proliferation. In this work, combining temperature and texture features, we designed a breast cancer detection system based on a smartphone with an infrared camera, achieving an accuracy of 99.21 % with the k-Nearest Neighbor classifier. We compared the diagnostic results obtained at the low resolution of the phone camera with those obtained at the high resolution of a conventional infrared camera. Accuracy and sensitivity decreased slightly at the lower resolution, but both remained above 98 %. The proposed breast cancer detection system not only performs excellently but also dramatically reduces the detection cost, which makes its prospects promising.

Highlights

- A portable smartphone-based breast cancer infrared detection system

- Combination of physiological temperature and thermal infrared image texture features

- Infrared image grayscale compression reduces the amount of computation

- Diagnostic results close to those obtained from high-quality data, at minimal cost

1. Introduction

Breast cancer remains the most common cancer in women and is listed as the second leading cause of cancer deaths. It accounts for about 30 % of all cancer cases [1, 2]. It is estimated that the number of breast cancer deaths will reach 2.5 million in 2021 [3]. The most effective way to fight the disease is early detection: breast cancer is highly treatable, with a 97 % chance of survival when it is diagnosed and treated early.

Mammography is acknowledged as the gold standard for early detection of breast cancer. However, its sensitivity in the general population is generally considered to be between 75 % and 90 %, with a positive predictive value of only 25 %. Magnetic resonance imaging (MRI), on the other hand, can make some patients uncomfortable because of the powerful magnets and contrast agents involved [4]. Zemouri et al. studied the application of deep neural networks to breast cancer diagnosis [5, 6]. Radiation exposure, professional and technical requirements, and expensive testing costs limit the popularisation of early breast cancer screening.

Infrared thermal imaging technology, an adjunct to mammography, was approved by the FDA (Food and Drug Administration) in 1982, providing a new option for early breast cancer screening. It focuses more on physiological features than on pathological abnormalities to identify defects in regions of interest (ROI). Its key advantage is that tumour tissue is often accompanied by abnormal temperature as a result of increased blood supply and angiogenesis. The non-invasive, painless, and non-radiative thermal imaging technique is therefore well suited to detecting tissue abnormalities and offers advantages over traditional methods.

In the study of infrared image detection technology for breast cancer, Schaefer et al. manually segmented infrared thermal images into the left and right breasts and extracted features (e.g., the average temperature, normalized histogram, Fourier spectrum) for breast cancer pattern classification [7]. Gogoi et al. studied a variety of texture features for asymmetry detection [8]. These works show that the thermal infrared image of the breast contains essential information about breast cancer and that diagnosis can be achieved effectively by extracting texture features. Consequently, four texture features (contrast, inverse difference moment, entropy, and energy, i.e., the angular second moment) obtained from the gray level co-occurrence matrix (GLCM) of the thermal infrared image, together with temperature characteristics, are selected to screen for breast cancer.

Nevertheless, existing breast cancer detection methods usually acquire the infrared image with an infrared camera, transfer it to a computer through a data cable or memory card, and run diagnostic software on the computer offline. This approach has the following problems: 1) infrared cameras are too expensive: at present, infrared cameras with a resolution of around 680×480 generally cost between 15,000 and 30,000 dollars, which makes broad use challenging; 2) offline analysis and detection raises the barrier for ordinary people to use an infrared camera for breast cancer detection; 3) the detection device has poor portability; 4) if multiple people share the infrared camera and the detection program, the convenience and privacy of detection are reduced.

The popularization of early breast cancer screening requires an inexpensive, accessible, and user-friendly means of detection. Smartphone cameras have already been applied in telemedicine, for example in cervical cancer screening and melanoma testing. Preliminary research shows that it is feasible to collect digital images with a smartphone for clinical diagnosis, with reasonable diagnostic accuracy. Given the widespread use of smartphones and the development of infrared camera technology, in this paper we designed a breast cancer detection system based on a smartphone with an infrared camera. Users can perform the test simply by purchasing an infrared phone camera, costing 300 to 500 dollars, and installing the detection application. The system improves the convenience of screening, the portability of the device, and the real-time performance of detection and analysis, thereby supporting the large-scale adoption of the detection device.

In summary, the proposed portable breast cancer detection system, based on a smartphone with an infrared camera, aims to provide accurate predictions and guide patients to appropriate treatment. It is an inexpensive, painless, and accessible screening tool that can broaden the paths to diagnosis and treatment of breast cancer and other diseases, substantially improve the currently limited availability of early breast cancer screening, and contribute to human health.

The rest of the paper is organized as follows. Section 2 describes the breast cancer detection method based on a smartphone with an infrared camera in detail. Section 3 describes the data acquisition protocols and the test results on the dataset. Finally, conclusions are drawn in Section 4.

2. Methodology

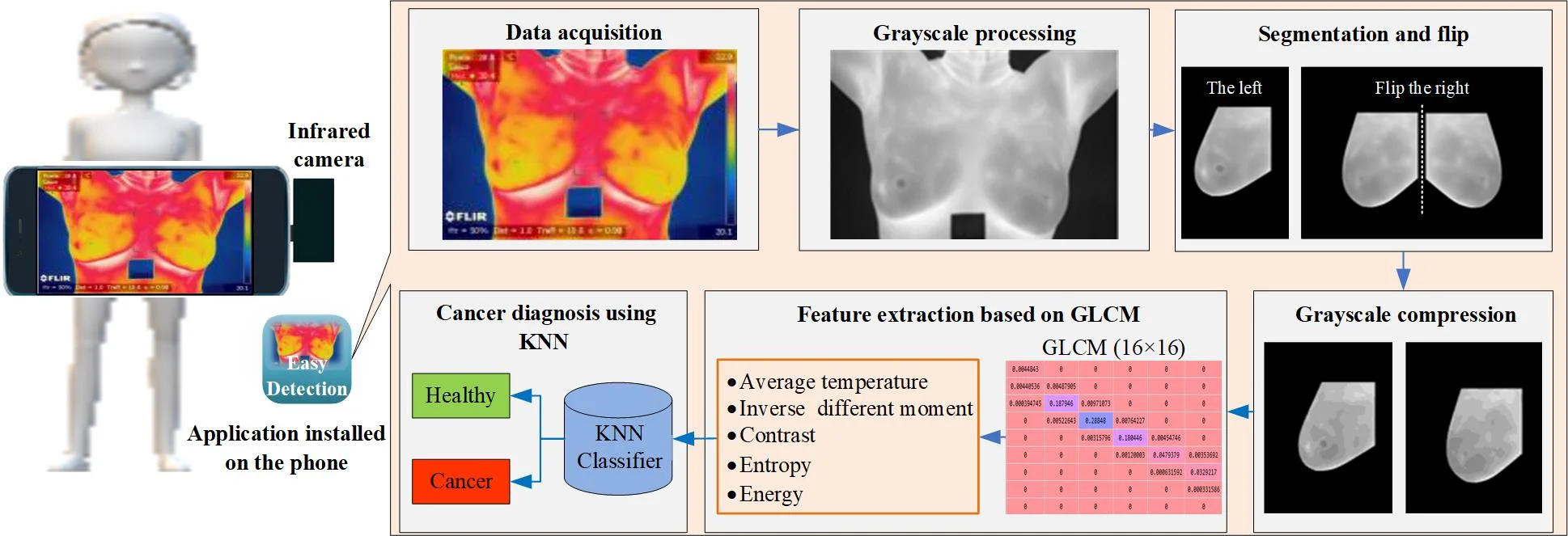

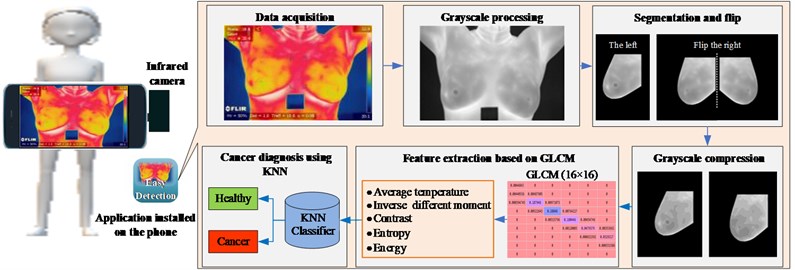

The proposed portable breast cancer detection system is depicted in Fig. 1. It consists of an infrared camera connected to a smartphone and an installed application. The app comprises the following modules: data acquisition, grayscale processing, segmentation and flip, grayscale compression, GLCM-based feature extraction, and KNN-based cancer diagnosis. First, in the data acquisition module, the subject's chest is photographed painlessly with the smartphone's infrared camera to obtain a thermal infrared image of the breasts. The grayscale processing module converts the thermal infrared image to grayscale, and the segmentation and flip module then cuts the ROIs of the left and right breasts out of the image. In addition, the right ROI is flipped horizontally into the mirror-symmetric position so that it can be compared with the left one, taking into account the variation between the two breasts. When the GLCM is computed, too many gray levels produce a large GLCM, which makes feature calculation expensive. Hence, the grayscale compression module reduces the original image to 16 gray levels; with only 16 distinct gray levels, the computation better matches the computing power of smartphones and takes less time. Next, the GLCM-based feature extraction module computes the average temperature of the ROI, a physiological feature, as well as texture parameters from the GLCM. Finally, the KNN diagnosis module trains the classifier to detect breast cancer and outputs the label.
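The grayscale compression and flip steps can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the app's code: the image is an 8-bit grayscale list of rows, compression is uniform quantization into 16 equal-width bins, and the ROI segmentation, which the text does not detail, is replaced by a hypothetical split at the vertical midline. Both function names are hypothetical.

```python
def compress_gray_levels(gray, levels=16, max_value=255):
    """Uniformly quantize gray values in 0..max_value into bins 0..levels-1."""
    bin_width = (max_value + 1) / levels  # 16 gray values per bin for 8-bit input
    return [[min(levels - 1, int(v // bin_width)) for v in row] for row in gray]


def split_and_flip(gray):
    """Hypothetical ROI step: split at the vertical midline and mirror the right half.

    Mirroring puts both halves in the same orientation so that corresponding
    pixels can be compared; a real system would segment each breast instead.
    """
    width = len(gray[0])
    half = width // 2
    left_roi = [row[:half] for row in gray]
    right_roi = [row[width - half:][::-1] for row in gray]  # horizontal flip
    return left_roi, right_roi


if __name__ == "__main__":
    image = [[0, 64, 128, 255],
             [15, 16, 200, 240]]
    q = compress_gray_levels(image)
    print(q)                  # [[0, 4, 8, 15], [0, 1, 12, 15]]
    print(split_and_flip(q))  # ([[0, 4], [0, 1]], [[15, 8], [15, 12]])
```

Uniform binning is only one way to compress gray levels; the text states the target of 16 levels but not the mapping used.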

Fig. 1. Breast cancer detection process

2.1. Gray level co-occurrence matrix

The gray level co-occurrence matrix, proposed by Haralick et al. [9], is a histogram of gray-level pairs that describes the spatial distribution of gray levels in an image, and it is a powerful tool for analysing image texture. Consider pixel points $(x, y)$ and $(x + \Delta x, y + \Delta y)$ with a fixed positional relationship in the image, where $\Delta x$ and $\Delta y$ denote the horizontal and vertical distances, respectively; both are set to 1 in this paper. Their gray values $i = f(x, y)$ and $j = f(x + \Delta x, y + \Delta y)$ form the combination $(i, j)$. The GLCM is denoted as $P = [p(i, j)]$, where the element $p(i, j)$ is the relative frequency with which the gray value combination $(i, j)$ appears. Since the original image has many gray levels, the dimension of the GLCM would be too high for efficient calculation. To speed up data processing and better suit the smartphone, we compress the image to 16 gray levels to obtain a lower-dimensional (16×16) GLCM.
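A direct transcription of this definition for the offset (Δx, Δy) = (1, 1) is sketched below in plain Python. It assumes the image has already been compressed to integer levels 0 to 15, counts each ordered pixel pair once (no symmetrization), and normalizes the counts to relative frequencies; the function name `glcm` is hypothetical. scikit-image's `graycomatrix` provides an optimized, more general implementation.

```python
def glcm(img, levels=16, dx=1, dy=1):
    """Normalized gray level co-occurrence matrix for one non-negative offset.

    img holds integer gray levels in 0..levels-1 (e.g. after compression).
    Entry [i][j] is the relative frequency of pixel pairs whose first pixel
    (x, y) has gray level i and whose neighbour (x + dx, y + dy) has level j.
    """
    counts = [[0] * levels for _ in range(levels)]
    rows, cols = len(img), len(img[0])
    pairs = 0
    for y in range(rows - dy):
        for x in range(cols - dx):
            counts[img[y][x]][img[y + dy][x + dx]] += 1
            pairs += 1
    if pairs == 0:
        raise ValueError("image is too small for the requested offset")
    return [[c / pairs for c in row] for row in counts]


if __name__ == "__main__":
    checkerboard = [[0, 1, 0],
                    [1, 0, 1],
                    [0, 1, 0]]
    print(glcm(checkerboard, levels=2))  # [[0.5, 0.0], [0.0, 0.5]]
```

With 16 levels the result is always a 16×16 matrix, regardless of the image size, which is what keeps the later feature computations cheap on a phone.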

2.2. Feature extraction

The temperature values of the image are saved as a matrix during image acquisition. We select the average temperature of the ROI, which is sensitive to tissue heat abnormalities caused by inflammation, vascular proliferation, or other causes. It is defined in Eq. (1):

$$\bar{T} = \frac{1}{MN}\sum_{x=1}^{M}\sum_{y=1}^{N} T(x, y) \tag{1}$$

where $T$ is the $M \times N$ temperature matrix corresponding to the ROI.

With the gray level co-occurrence matrix $P = [p(i, j)]$, several texture features can be constructed conveniently; in the following, the sums run over all gray levels $i, j = 0, 1, \dots, 15$. Contrast, a measure of the extent of local variation in the image, reflects the sharpness of the picture. It is defined in Eq. (2):

$$\mathrm{CON} = \sum_{i}\sum_{j} (i - j)^{2}\, p(i, j) \tag{2}$$

The angular second moment (ASM) is the sum of the squares of the GLCM entries. It reflects the uniformity of the image gray-level distribution and the coarseness of the texture, as given in Eq. (3):

$$\mathrm{ASM} = \sum_{i}\sum_{j} p(i, j)^{2} \tag{3}$$

Entropy measures the randomness of the GLCM elements: the more ordered the gray-level distribution, the lower the entropy, and the more disordered, the higher the entropy. It represents the degree of non-uniformity or complexity of the texture in the image, as given in Eq. (4), with the convention $0 \log 0 = 0$:

$$\mathrm{ENT} = -\sum_{i}\sum_{j} p(i, j)\,\log p(i, j) \tag{4}$$

The inverse difference moment (IDM) reflects the local homogeneity of the image texture, as given in Eq. (5). The more uniform the different regions of the texture and the slower the local change, the larger the IDM, and vice versa:

$$\mathrm{IDM} = \sum_{i}\sum_{j} \frac{p(i, j)}{1 + (i - j)^{2}} \tag{5}$$
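Eqs. (1) to (5) can be evaluated with a few loops. The sketch below assumes a normalized GLCM stored as a square list of lists (counts divided by the number of pixel pairs), uses the natural logarithm for entropy (the base is not stated in the text and only rescales the feature), and applies the convention 0 log 0 = 0. The function names are hypothetical.

```python
import math


def mean_temperature(temps):
    """Eq. (1): average of the ROI temperature matrix (list of rows)."""
    values = [t for row in temps for t in row]
    return sum(values) / len(values)


def texture_features(p):
    """Eqs. (2)-(5) for a normalized GLCM p given as a square list of lists."""
    contrast = asm = entropy = idm = 0.0
    for i, row in enumerate(p):
        for j, pij in enumerate(row):
            contrast += (i - j) ** 2 * pij      # Eq. (2)
            asm += pij ** 2                     # Eq. (3)
            if pij > 0:                         # convention: 0 * log(0) = 0
                entropy -= pij * math.log(pij)  # Eq. (4), natural log
            idm += pij / (1 + (i - j) ** 2)     # Eq. (5)
    return {"contrast": contrast, "asm": asm, "entropy": entropy, "idm": idm}


if __name__ == "__main__":
    print(mean_temperature([[33.0, 34.0], [35.0, 36.0]]))  # 34.5
    # A purely diagonal GLCM: no gray-level change between neighbours.
    print(texture_features([[0.5, 0.0], [0.0, 0.5]]))
```

In this example the diagonal GLCM gives zero contrast and the maximum IDM of 1, matching the intuition that a texture without local gray-level change is perfectly homogeneous.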

2.3. The k-nearest neighbor classifier

The k-Nearest Neighbor (KNN) algorithm is a widely used and effective data mining classification method [10]. Its core idea is to find, according to a similarity measure, the samples in the feature space most similar to the query sample (its nearest neighbours); the category of the query sample is then determined by the class to which most of these neighbours belong. The choice of similarity measure and of k has a marked influence on the accuracy of KNN classification.

The KNN algorithm proceeds as follows: 1) calculate the distance between the test sample and each training sample; 2) sort the distances in ascending order; 3) select the k training points with the smallest distances; 4) count how often each category occurs among these k points; 5) return the most frequent category among the k points as the predicted label of the test sample. In this study, grid search optimization selected the Manhattan distance, defined in Eq. (6), and k = 2 to establish the KNN classifier:

$$d(\mathbf{x}, \mathbf{y}) = \sum_{i=1}^{n} \lvert x_i - y_i \rvert \tag{6}$$

where $\mathbf{x} = (x_1, \dots, x_n)$ and $\mathbf{y} = (y_1, \dots, y_n)$ represent two different samples in the n-dimensional feature space.
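Steps 1) to 5) with the Manhattan distance and k = 2 translate into the following plain-Python sketch. With k = 2 the two neighbours can disagree, and the text does not state a tie-breaking rule; here ties go to the class of the nearest neighbour, which is an assumption. The function names and the feature values in the example are hypothetical.

```python
from collections import Counter


def manhattan(a, b):
    """Eq. (6): sum of absolute coordinate differences."""
    return sum(abs(x - y) for x, y in zip(a, b))


def knn_predict(train_X, train_y, query, k=2):
    """Rank training samples by Manhattan distance and take a majority vote."""
    # Steps 1)-2): distances to every training sample, sorted ascending (stable sort)
    ranked = sorted(zip(train_X, train_y), key=lambda s: manhattan(s[0], query))
    # Step 3): keep the k nearest neighbours
    labels = [label for _, label in ranked[:k]]
    # Steps 4)-5): most frequent label; Counter.most_common orders equal counts
    # by first occurrence, so a tie goes to the nearest neighbour's class
    return Counter(labels).most_common(1)[0][0]


if __name__ == "__main__":
    # Made-up feature vectors: (mean temperature in degC, GLCM contrast)
    X = [[36.1, 0.20], [36.3, 0.25], [37.4, 0.90], [37.6, 0.85]]
    y = ["healthy", "healthy", "cancer", "cancer"]
    print(knn_predict(X, y, [36.2, 0.22]))  # healthy
```

KNN has no training phase beyond storing the samples, so on the phone the cost is dominated by the distance computations in step 1).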

3. Results

3.1. Datasets

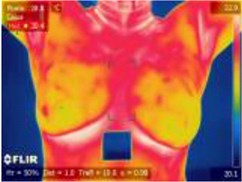

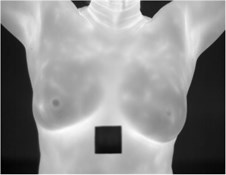

The data come from a public dataset acquired according to specific image acquisition protocols. An average of 27 images is captured per participant: the static protocol provides different shooting positions (Front, Right Lateral 45°, Right Lateral 90°, Left Lateral 45°, and Left Lateral 90°), while the dynamic protocol produces 20 sequential frontal images and 2 further lateral images (Right Lateral 90° and Left Lateral 90°). Details of the data acquisition protocols and the dataset are documented in [11]. Data obtained with the dynamic acquisition protocol highlight the hot regions and vascularization of the breast more effectively than static data. We therefore use the images obtained with the dynamic protocol, whose resolution is 680×480. Because our images will be collected with the infrared phone camera, the resolution is reduced to 160×120, matching the FLIR Lepton 3 infrared camera. The resolution conversion is shown in Fig. 2.
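The text does not say how the 680×480 images were reduced to 160×120. As one possibility, the sketch below uses nearest-neighbour sampling, which is an assumption; area averaging or bilinear interpolation (for example with OpenCV's `cv2.resize`) would be common alternatives. The function name is hypothetical.

```python
def downsample_nearest(img, out_w=160, out_h=120):
    """Nearest-neighbour resampling of a list-of-rows image to out_w x out_h.

    Output pixel (x, y) copies input pixel (x * in_w // out_w, y * in_h // out_h),
    so every index stays inside the input image.
    """
    in_h, in_w = len(img), len(img[0])
    return [
        [img[y * in_h // out_h][x * in_w // out_w] for x in range(out_w)]
        for y in range(out_h)
    ]


if __name__ == "__main__":
    full = [[x for x in range(680)] for _ in range(480)]  # synthetic 680x480 image
    small = downsample_nearest(full)
    print(len(small[0]), len(small))  # 160 120
```

Nearest-neighbour sampling discards pixels rather than averaging them, so it may preserve local texture differently from a real low-resolution sensor; the choice affects the GLCM features.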

The features extracted with the above method are divided into a training set and a test set as shown in Table 1. The training set is further divided into training and validation parts, which are used for grid search and cross-validation to obtain a better-performing classifier.
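The parameter selection on the training/validation split can be sketched as an exhaustive grid search over k and the distance metric. This sketch scores each combination on a single hold-out validation split rather than over multiple cross-validation folds, and the candidate grid (k from 1 to 5, Manhattan or Euclidean distance) is an assumption, since the searched ranges are not reported. All names are hypothetical.

```python
import math
from collections import Counter
from itertools import product


def manhattan(a, b):
    return sum(abs(x - y) for x, y in zip(a, b))


def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


METRICS = {"manhattan": manhattan, "euclidean": euclidean}


def knn_predict(train_X, train_y, query, k, dist):
    """Majority vote among the k nearest training samples under `dist`."""
    ranked = sorted(zip(train_X, train_y), key=lambda s: dist(s[0], query))
    return Counter(label for _, label in ranked[:k]).most_common(1)[0][0]


def grid_search(train_X, train_y, val_X, val_y, ks=(1, 2, 3, 4, 5), metrics=METRICS):
    """Return (validation accuracy, k, metric name) of the best combination.

    Ties keep the earliest combination in the grid (strict '>' comparison).
    """
    best = None
    for k, name in product(ks, metrics):
        hits = sum(
            knn_predict(train_X, train_y, x, k, metrics[name]) == y
            for x, y in zip(val_X, val_y)
        )
        accuracy = hits / len(val_y)
        if best is None or accuracy > best[0]:
            best = (accuracy, k, name)
    return best
```

The selected k and metric would then be fixed before a single evaluation on the untouched test set, so that the test accuracy is not biased by the search.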

Fig. 2. a) An infrared image with a resolution of 680×480, b) a grayscale image with a resolution of 680×480, and c) a grayscale image with a resolution of 160×120


Table 1. Details of the dataset partition

| Label | Train set: Train | Train set: Validation | Test set | Total |
| --- | --- | --- | --- | --- |
| Healthy | 380 | 190 | 190 | 760 |
| Cancer | 380 | 190 | 190 | 760 |
| Total | 760 | 380 | 380 | 1520 |

3.2. Diagnosis results

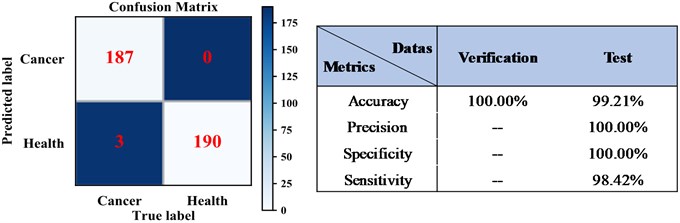

The following parameters are used to evaluate performance: true positives (TP) is the number of patients correctly diagnosed as abnormal, true negatives (TN) is the number of healthy people correctly diagnosed as normal, false positives (FP) is the number of healthy people misdiagnosed as abnormal, and false negatives (FN) is the number of patients missed. The metrics used in this paper follow [12]. The breast cancer detection results of KNN are shown in Fig. 3, where TP, TN, FP, and FN are 187, 190, 0, and 3, respectively.
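Using the conventional definitions (accuracy = (TP + TN)/(TP + TN + FP + FN), precision = TP/(TP + FP), specificity = TN/(TN + FP), sensitivity = TP/(TP + FN)), the confusion matrix above reproduces the KNN values in Table 2, as this short check shows. That [12] uses exactly these formulas is an assumption; the function name is hypothetical.

```python
def detection_metrics(tp, tn, fp, fn):
    """Standard confusion-matrix metrics, returned as fractions (not percentages)."""
    def ratio(num, den):
        # Undefined metrics (zero denominator) are reported as NaN
        return num / den if den else float("nan")

    return {
        "accuracy": ratio(tp + tn, tp + tn + fp + fn),
        "precision": ratio(tp, tp + fp),
        "specificity": ratio(tn, tn + fp),
        "sensitivity": ratio(tp, tp + fn),
    }


if __name__ == "__main__":
    m = detection_metrics(tp=187, tn=190, fp=0, fn=3)
    for name, value in m.items():
        print(f"{name}: {100 * value:.2f} %")
    # accuracy: 99.21 %
    # precision: 100.00 %
    # specificity: 100.00 %
    # sensitivity: 98.42 %
```

The 377 correct decisions out of 380 test images give the 99.21 % accuracy, and 187/190 gives the sensitivity that Table 2 rounds to 98.4 %.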

Fig. 3. The confusion matrix of the KNN detection results

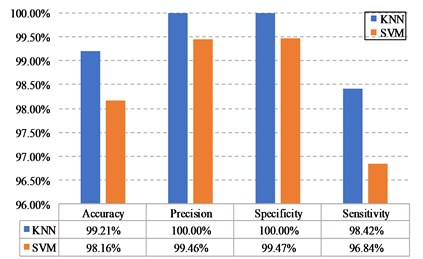

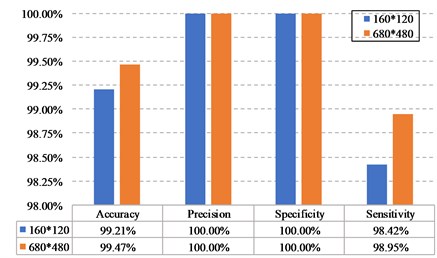

We compared the detection results of the system with different classifiers and at different resolutions; the results are shown in Fig. 4.

Both classifiers performed satisfactorily, and the KNN classifier achieved an accuracy of 99.21 %, higher than the 98.16 % of the support vector machine (SVM). We also compared the detection results at the raw data resolution and at the resolution of the smartphone infrared camera. The accuracy of the KNN classifier is 99.47 % at 680×480 and falls to 99.21 % at 160×120, i.e., 378 versus 377 correctly classified images out of the 380 in the test set. Artificially reducing the image resolution therefore has only a slight influence on the detection result, which still meets the requirements.

In addition, we compared the results of different methods on the same datasets, as shown in Table 2. The proposed method achieves higher accuracy, precision, specificity, and sensitivity than the previous studies listed.

Fig. 4. a) The detection results on the test set with different classifiers, b) the detection results of the system at different resolutions.


Table 2. Comparison of the results of different methods

| Methods | Accuracy | Precision | Specificity | Sensitivity |
| --- | --- | --- | --- | --- |
| The proposed method | 99.21 % | 100 % | 100 % | 98.4 % |
| Wavelet analysis and feed-forward neural network (FNN) [12] | 90.48 % | – | 89.73 % | 87.6 % |
| Representation learning and texture analysis [13] | 95.8 % | 94.6 % | – | – |
| Convolutional Neural Networks [14] | 93 % | 95 % | 96 % | 91 % |
| Statistical features and FNN [15] | 89.4 % | – | 90 % | 86 % |

4. Conclusions

In this paper, a portable breast cancer detection system based on a smartphone with an infrared camera is proposed. The method achieves an accuracy of 99.21 % with KNN, outperforming the previous methods compared in Table 2. Meanwhile, as smartphones incorporating advanced digital camera technology develop rapidly, early breast cancer screening will become more accessible, easing the problems of high cost, technical barriers, and scarce medical resources. As a non-invasive, non-radiative, low-cost auxiliary screening tool, the system can broaden the approaches to diagnosing and treating breast cancer and other diseases. Future work will focus on standardizing image acquisition strategies to maximize the reliability and accuracy of diagnostic results.

References

[1] Ibrahim A., Mohammed S., Ali H. A. Breast cancer detection and classification using thermography: a review. International Conference on Advanced Machine Learning Technologies and Applications, Vol. 723, 2018, p. 496-505.

[2] Zemouri R., Omri N., Devalland C., Arnould L., Morello B., Zerhouni N., Fnaiech F. Breast cancer diagnosis based on joint variable selection and constructive deep neural network. IEEE 4th Middle East Conference on Biomedical Engineering, 2018, p. 159-164.

[3] Fan L., Strasser Weippl K., Li J. J., Louis J. S., Finkelstein D. M., Yu K. D., Chen W. Q., Shao Z. M., Goss P. E. Breast cancer in China. Lancet Oncology, Vol. 15, Issue 7, 2014, p. 279-289.

[4] Sarigoz T., Ertan T., Topuz O., Sevim Y., Cihan Y. Role of digital infrared thermal imaging in the diagnosis of breast mass: a pilot study: Diagnosis of breast mass by thermography. Infrared Physics and Technology, Vol. 91, 2018, p. 214-219.

[5] Zemouri R., Omri N., Morello B., Devalland C., Arnould L., Zerhouni N., Fnaiech F. Constructive deep neural network for breast cancer diagnosis. IFAC-PapersOnLine, Vol. 51, Issue 27, 2018, p. 98-103.

[6] Zemouri R., Devalland C., Valmary Degano S., Zerhouni N. Intelligence artificielle: quel avenir en anatomie pathologique? Annales de Pathologie, Elsevier, 2019, p. 119-129.

[7] Schaefer G., Závišek M., Nakashima T. Thermography based breast cancer analysis using statistical features and fuzzy classification. Pattern Recognition, Vol. 42, Issue 6, 2009, p. 1133-1137.

[8] Gogoi U. R., Majumdar G., Bhowmik M. K., Ghosh A. K., Bhattacharjee D. Breast abnormality detection through statistical feature analysis using infrared thermograms. International Symposium on Advanced Computing and Communication, 2015, p. 258-265.

[9] Haralick R. M., Shanmugam K. Textural features for image classification. IEEE Transactions on Systems, Man, and Cybernetics, Vol. 3, Issue 6, 1973, p. 610-621.

[10] Sánchez J. S., Mollineda R. A., Sotoca J. M. An analysis of how training data complexity affects the nearest neighbor classifiers. Pattern Analysis and Applications, Vol. 10, Issue 3, 2007, p. 189-201.

[11] Silva L. F., Saade D. C. M., Sequeiros G. O., Silva A. C., Paiva A. C., Bravo R. S., Conci A. A new database for breast research with infrared image. Journal of Medical Imaging and Health Informatics, Vol. 4, Issue 1, 2014, p. 92-100.

[12] Pramanik S., Bhattacharjee D., Nasipuri M. Wavelet based thermogram analysis for breast cancer detection. International Symposium on Advanced Computing and Communication, 2015, p. 205-212.

[13] Abdel Nasser M., Moreno A., Puig D. Breast cancer detection in thermal infrared images using representation learning and texture analysis methods. Electronics, Vol. 8, Issue 1, 2019, p. 100.

[14] Baffa M. D. F. O., Lattari L. G. Convolutional neural networks for static and dynamic breast infrared imaging classification. 31st SIBGRAPI Conference on Graphics, Patterns and Images, 2018, p. 174-181.

[15] Pramanik S., Banik D., Bhattacharjee D., Nasipuri M. A Computer-Aided Hybrid Framework for Early Diagnosis of Breast Cancer. Advanced Computing and Systems for Security, Springer, 2019, p. 111-124.


About this article

This research is supported by the National Natural Science Foundation of China (Grant Nos. 51605014, 61803013, 51105019 and 51575021) and the Fundamental Research Funds for the Central Universities (Grant No. YWF-19-BJ-J-177).